The Audi Aicon Self-Driving Concept Car


Self-driving cars (SDCs) will be the most important drivers of machine vision, neural network architectures, and AI processor hardware over the 2020s and 2030s. The case boils down to money and technology acceleration. Money (hundreds of billions of dollars in R&D) will be invested in the 2020s. That money will translate into a hundred thousand engineering jobs worldwide as companies and countries in Asia and the West compete for dominance. This article is a heads-up display of that thesis and its ramifications.

But first, why am I (an MD, PhD) writing about self-driving cars (SDCs) and what do I bring to the autonomous racetrack? Since my days as a math/cog sci undergrad at MIT in the 1960s, I've been interested in two questions:

And might a "conscious, subjective world" be a necessity for human-level intelligence, for machine intelligence in general, and for self-driving cars in particular? The issue couldn't be more timely. (It underpinned neural net star Professor Yoshua Bengio's 2019 keynote at NeurIPS, which had 13,000 attendees.)

How the brain produces consciousness will eventually be elucidated by the world's thousands of neuroscience and cognitive psychology labs. (The Society for Neuroscience alone has 37,000 members.) My own closest affiliation is at Stanford's Neurosciences Institute, just rechristened the Wu Tsai Neurosciences Institute. Stanford's neuroscientists will be moving into their new $250 million building in the next few months. My (popular press) articles on the neuroscience of consciousness appear here and here and here.

But this article focuses on steps toward creating a "subjective" world model inside a machine, namely a self-driving car. Note: AI engineers themselves don't use this anthropomorphic language. Nonetheless, their work is a first step in this direction, as I detailed here.

SDCs are all around me in Silicon Valley as I ride around on my electric bike from Stanford. Google's Waymo cars were here first (always with safety drivers). Our electric utility PG&E just laid the 12,000-volt power lines under my street for Tesla's supercharging stations off Sand Hill Road. That's where millions of dollars are being pumped into SDC startups by VCs like Andreessen Horowitz, Sequoia, and Kleiner Perkins. (The HQs of Tesla, Waymo, and Aurora are all an easy bike ride from here.) I regularly hear our grad students and visiting faculty present their work in machine vision and neural network AI.

So in this article I'll try to distill this heady atmosphere into the essentials of future sensor tech, GPU hardware, relevant AI, and new venture capital investments. The cars aren't conscious yet, but by the time they're fully autonomous, they may have taken the first step.

But, everybody's first question is ...

I won't keep you in suspense. Widespread rollout is at least a decade away for fully autonomous vehicles (AVs with no steering wheel.) It's not fifty years away and probably not thirty either. (But, MIT Prof. / iRobot (Roomba) founder and well-known AI pessimist Rod Brooks dissents.)

The brief answer is money: hundreds of billions of dollars' worth. Basically every traditional car company, many Tier 1 suppliers, and hundreds of startups are pouring billions into the relevant R&D. The work is worldwide: not only in North America, but also in Europe, Israel, China, Taiwan, and South Korea. A solution is worth trillions of dollars per year. That translates into perhaps a hundred thousand engineers competing worldwide. (Details below: keep reading.)

Human beings by age six (and even much younger) bring to the world two essential skills:

1) intuitive physics and 2) intuitive psychology. (And, both rely on high resolution vision.)

Intuitive physics means common sense notions of how the physical world works. You drop things or knock them over, and they fall down. Some things you can shove out of the way and some things are too heavy. Hit something hard enough, and it breaks. Water splashes; bricks don't.

Intuitive psychology means common sense notions of what other people (and animals) want and do and feel. That guy is trying to cross the street. That child chasing the ball is not paying attention. That policeman wants me to stop. That speeding car is in a rush. Collisions hurt and are costly.

The lack of common sense notions of physics and psychology in machines (and their lack of human-like visual perception) is the essential problem.

In this must-see 2018 YouTube video, MIT Prof. Josh Tenenbaum explains why multilayer ConvNets, so-called "Deep AI," are only part of the solution. He beautifully shows what two-year-old kids can do that neural nets alone can't.

Now, some details ...

This is well covered in the popular literature, but here are the relevant facts. I'll break it down into two, maybe three, big categories. First is ADAS, or advanced driver assistance systems, designed to increase the safety of the human driver. Examples are adaptive cruise control, automated parking, emergency braking, and lane keeping. A more advanced example is what Tesla Autopilot now offers: automated everything, but meant to be used only with extreme caution, with hands close to the steering wheel. This is called Level 2, or L2 (partial automation). I won't cover Level 2 or Level 3 tech here. It's a stepping stone, but really it's its own thing. A big problem is that drivers can be seduced into thinking the car is more capable than it really is ... until they crash.

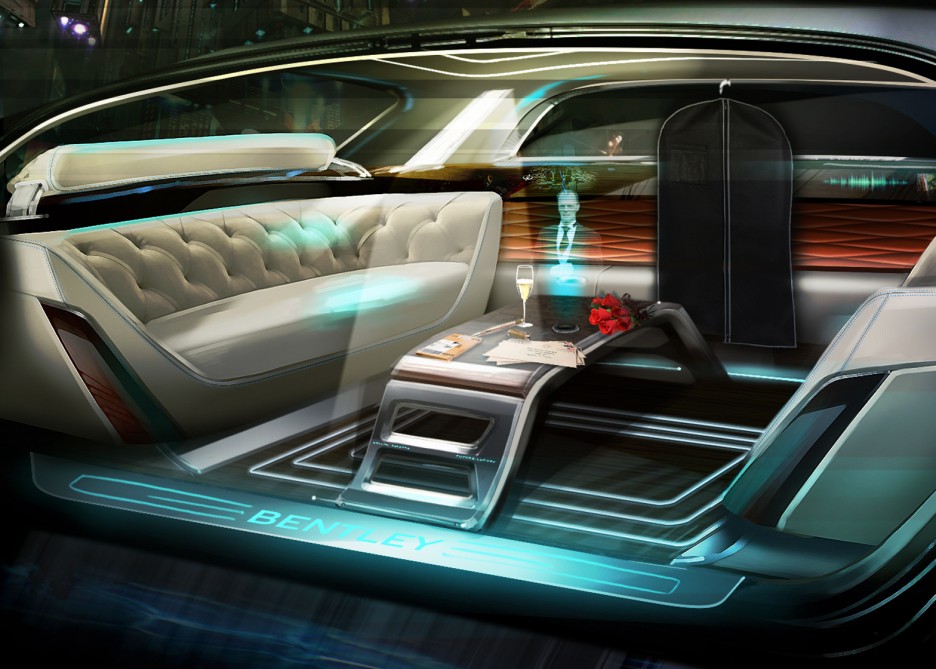

What I'm really focused on is Level 4 and Level 5 autonomous driving (fully automated systems for AVs, or autonomous vehicles). Level 5 is easiest to describe. Throw away the steering wheel! You can sleep as the car drives itself through busy city traffic. Level 4 is the same thing except within a greatly limited (geofenced) safe area: big suburban streets, nice weather, low traffic, low speed, and no weird situations (construction zones, for example).
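The level taxonomy can be written down as a small data structure. This is a minimal sketch: the level names follow the SAE J3016 standard, but the helper functions and the single `inside_operating_domain` flag are hypothetical simplifications of what J3016 calls the operational design domain, not part of any real API.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving-automation levels, named as in SAE J3016."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2       # e.g. Tesla Autopilot: the driver supervises at all times
    CONDITIONAL_AUTOMATION = 3   # the car drives, but a human must take over on request
    HIGH_AUTOMATION = 4          # no human needed, but only inside a limited (geofenced) domain
    FULL_AUTOMATION = 5          # no human needed anywhere; no steering wheel required


def human_must_supervise(level: SAELevel) -> bool:
    """Levels 0-2: a human is driving or continuously monitoring the road."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def can_run_driverless(level: SAELevel, inside_operating_domain: bool) -> bool:
    """Hypothetical simplification: may the car drive with nobody ready to take over?"""
    if level == SAELevel.FULL_AUTOMATION:
        return True
    if level == SAELevel.HIGH_AUTOMATION:
        return inside_operating_domain  # L4 only within its geofence
    return False


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name,
              "supervised" if human_must_supervise(level) else "unsupervised",
              can_run_driverless(level, inside_operating_domain=False))
```

The only difference between L4 and L5 in this sketch is the geofence flag, which mirrors the article's description.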

Level 5 is at least ten years off (but not more than twenty; that's my guess). Level 4 is happening right now with Alphabet's (Google's) Waymo cars in Phoenix, Arizona. You book a ride on your phone; a driverless car (with NO safety driver) shows up and takes you to your (highly constrained, geofenced) destination. Lyft, meanwhile, is offering self-driving rides in Las Vegas (powered by Aptiv and always with a safety driver).

In the next year or two Waymo will be expanding this taxi service to several more suburban areas and to many more passengers (but not to the general public.) That's how Level 4 will expand ... gradually into more challenging weather and busier traffic.

Where human consciousness and intelligence are really needed is in exactly these common but tricky situations. You're not going to be snoozing if you're an American driving a rental car in Rome or New Delhi or Tokyo. Even millions of miles of training data aren't enough. In this superb interview Sebastian Thrun (founder of Google's self-driving car project, which became Waymo) emphasizes this: even reacting correctly to 99% of long-tail events still means you crash about once a week.
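Thrun's point is simple arithmetic. If 99% success still means one crash a week, the car must be meeting roughly 100 long-tail events per week. That figure is an inference from his numbers, not data. A minimal sketch under that assumption:

```python
def expected_failures(rare_events_per_week: float, success_rate: float) -> float:
    """Expected number of mishandled long-tail events per week."""
    return rare_events_per_week * (1.0 - success_rate)


def required_success_rate(rare_events_per_week: float, weeks_between_failures: float) -> float:
    """Success rate needed for, on average, one failure every `weeks_between_failures` weeks."""
    return 1.0 - 1.0 / (rare_events_per_week * weeks_between_failures)


if __name__ == "__main__":
    events = 100  # assumption: about 100 unusual situations per week of driving
    print(expected_failures(events, 0.99))          # about one failure per week
    print(required_success_rate(events, 52 * 10))   # rate needed for about one failure per decade
```

Moving from one failure per week to one per decade requires closing roughly 98% of the remaining 1% error. That is why the long tail dominates the schedule.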

That brings us to teleoperation and to simulation.

One thing that makes Level 4 (L4) driving feasible now is ... remote control! Many of the companies that plan to offer L4 taxi service will make use of a remote control operator that can be engaged automatically by the car (or by a passenger using a big red button: "Help, we're stuck!")

For example, check out Designated Driver and its ghostly competitor Phantom Auto. When an L4 or L5 car gets stuck, it can summon a human driver, possibly sitting thousands of miles away, to take the wheel remotely and do whatever is necessary: pull over, steer around an obstacle, obey a traffic cop, etc. Designated Driver has gotten its latency down to less than 100 milliseconds despite using conventional 4G (LTE) wireless.

Teleoperation is obviously a driving mode that will be greatly helped by gigabit-per-second 5G wireless, which should bring remote-control latencies below 10 milliseconds. 5G is rolling out now. The slowest part of the control loop will still be the remote human operator (the same reason that L2/L3 and ADAS are a different ballgame from Level 4 or 5).
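The claim that the human stays the bottleneck can be checked with distance-travelled arithmetic. This is a sketch with loudly assumed numbers: a 15 m/s (54 km/h) car and a 1 second operator reaction time. The simplified model adds one network round trip to the operator's reaction and ignores compute and actuation delays.

```python
def metres_travelled(speed_mps: float, delay_s: float) -> float:
    """Distance the car covers while a command is still in flight."""
    return speed_mps * delay_s


def teleop_blind_distance(speed_mps: float, network_rtt_s: float, operator_reaction_s: float) -> float:
    """Distance covered before a remote operator's correction takes effect.

    Simplified model: one network round trip (video up, command down) plus the
    operator's own reaction time; compute and actuation delays are ignored.
    """
    return metres_travelled(speed_mps, network_rtt_s + operator_reaction_s)


if __name__ == "__main__":
    speed = 15.0   # m/s, assumed urban speed
    human = 1.0    # s, assumed operator reaction time
    for label, rtt in [("4G, 100 ms", 0.100), ("5G, 10 ms", 0.010)]:
        print(label, round(teleop_blind_distance(speed, rtt, human), 2), "m")
```

Under these assumptions, 5G shrinks the blind distance only from about 16.5 m to about 15.2 m, because the operator's reaction time dominates the loop.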

Waymo says it has driven ten million miles on real roads and ten billion miles in simulation. That simulation is crucial: 100 kilometers of driving on a Kansas freeway is much easier than 100 meters through an Italian traffic circle.

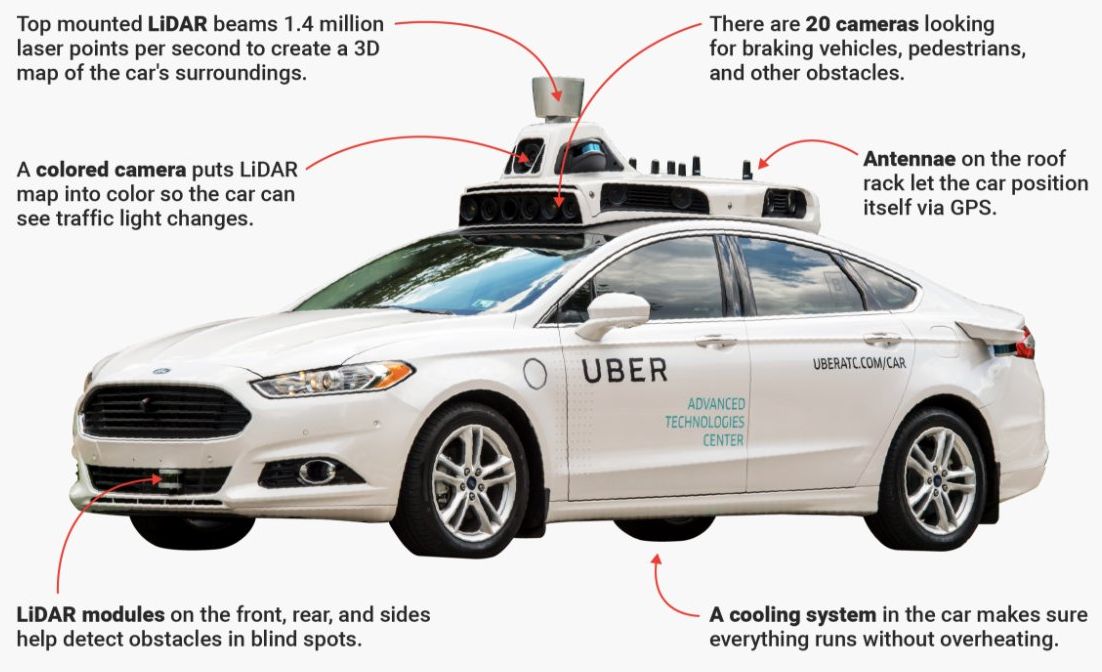

Using simulation, the driving software stack can be continually retrained and retested against thousands of the corner cases (the edge cases of the long tail above) that keep L4 cars from becoming L5 drivers. In this video we see the crucial role that simulation in the cloud plays in the design and test cycle at Uber ATG.
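The retest loop can be illustrated with a toy regression suite. This is a deliberately tiny sketch and not how Uber ATG or Waymo simulate. The "driving stack" is reduced to one reaction-time parameter, the car brakes at a constant friction times g, and the three scenarios and their numbers are hypothetical.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass(frozen=True)
class Scenario:
    """One hypothetical corner case: a hazard appears `gap_m` ahead of the car."""
    name: str
    speed_mps: float   # ego speed when the hazard appears
    gap_m: float       # distance to the hazard at that moment
    friction: float    # tyre-road friction coefficient (about 0.8 dry, far lower on ice)


def stopping_distance(speed_mps: float, reaction_s: float, friction: float) -> float:
    """Reaction distance plus braking distance at a constant deceleration of friction * g."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * friction * G)


def failing_scenarios(scenarios, reaction_s: float) -> list[str]:
    """Names of scenarios in which the car cannot stop before reaching the hazard."""
    return [s.name for s in scenarios
            if stopping_distance(s.speed_mps, reaction_s, s.friction) >= s.gap_m]


SCENARIOS = [
    Scenario("child chases ball", speed_mps=13.9, gap_m=25.0, friction=0.8),
    Scenario("stalled car in fog", speed_mps=27.8, gap_m=80.0, friction=0.7),
    Scenario("black ice", speed_mps=13.9, gap_m=30.0, friction=0.2),
]

if __name__ == "__main__":
    for reaction in (1.0, 0.5):  # "old" vs "improved" perception latency
        print(reaction, failing_scenarios(SCENARIOS, reaction))
```

Halving the reaction time fixes two of the cases. The black-ice case still fails, which signals that it needs a planning change (drive slower) rather than faster perception.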

Simulation is its own field and subindustry. Some companies are devoted exclusively to the problem of converting recorded sensor data from the car, eg at a busy intersection, into simulations that can be reused with new sensor suites and new algorithms. Here is Tesla's December 2019 patent related to streamlining the use of captured sensor data for neural net training.

There's another aspect of simulation that's of even more interest: real-time simulation. As the autonomous car goes down the road, it must often predict the likely trajectories of other cars, pedestrians, and salient physical objects in the unfolding scene. At the end of this interview with autonomy guru Chris Urmson (CEO of Aurora), Lex Fridman asks him for the key to the self-driving puzzle. His answer: predicting the trajectories of agents and objects over the next five seconds. Human drivers (roughly speaking) bring this crucial capability to the car, and it combines intuitive psychology with intuitive physics. If a collision seems possible, we slow down in proportion to some loss function integrated over possible trajectories. (Race drivers are also in a tight control loop with their own cars. Stanford's new bleeding-edge autonomous DeLorean does this too.)
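The "integrate over possible trajectories" idea can be sketched as a Monte Carlo estimate. This is a toy with several assumptions, and it is not Aurora's or anyone's production predictor. Other agents are modelled as constant velocity plus Gaussian acceleration noise. A "collision" is any approach within 2 m during the 5 second horizon. The `cautious_speed` rule is a hypothetical stand-in for the loss function in the text.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Agent:
    x: float   # position, metres
    y: float
    vx: float  # velocity, m/s
    vy: float


def rollout(agent, horizon_s=5.0, dt=0.1, accel_noise=0.0, rng=None):
    """Predict a path by integrating velocity, perturbed by random accelerations.

    With accel_noise=0 this is a pure constant-velocity prediction.
    """
    rng = rng if rng is not None else random.Random(0)
    x, y, vx, vy = agent.x, agent.y, agent.vx, agent.vy
    path = []
    for _ in range(round(horizon_s / dt)):
        vx += rng.gauss(0.0, accel_noise) * dt
        vy += rng.gauss(0.0, accel_noise) * dt
        x += vx * dt
        y += vy * dt
        path.append((x, y))
    return path


def collision_probability(ego, other, n_samples=200, radius_m=2.0, accel_noise=0.5, seed=0):
    """Monte Carlo estimate of P(other comes within radius_m of ego during the horizon)."""
    rng = random.Random(seed)
    ego_path = rollout(ego)  # the ego car follows its own plan (no noise)
    hits = 0
    for _ in range(n_samples):
        other_path = rollout(other, accel_noise=accel_noise, rng=rng)
        if any(math.dist(p, q) < radius_m for p, q in zip(ego_path, other_path)):
            hits += 1
    return hits / n_samples


def cautious_speed(nominal_mps, risk):
    """Hypothetical policy: slow down in proportion to the estimated risk."""
    return nominal_mps * (1.0 - risk)


if __name__ == "__main__":
    ego = Agent(0.0, 0.0, 10.0, 0.0)          # car heading along +x at 10 m/s
    pedestrian = Agent(30.0, -6.0, 0.0, 1.5)  # walking toward the car's lane
    risk = collision_probability(ego, pedestrian)
    print(f"risk={risk:.2f}, target speed={cautious_speed(10.0, risk):.1f} m/s")
```

A real system would predict many interacting agents jointly and weight outcomes by severity, not just by probability.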

People use their common-sense, causal knowledge of physical objects and other agents to analyze, in real time, the endless variety of novel scenarios they encounter. That's a reminder to see MIT Prof. Josh Tenenbaum's YouTube talk. Causal inference is a major focus of current AI work and was at the heart of my own Stanford AI/ML research (the RX Project) from 1976 to 1986.

Level 4 cars may require common sense causal knowledge before graduating to full Level 5.

AGI is artificial general intelligence. Another term for it is human-level artificial intelligence (HLAI). I'll use AGI as a synonym for HLAI (although in my view real AGI could far exceed HLAI). But even HLAI covers a lot of ground.

Some people are capable of doing Nobel Prize-winning science or writing Pulitzer Prize-winning novels or juggling chainsaws or playing violin concertos. Clearly that level of mastery is not required to drive a car. That's why we let almost all sixteen-year-olds do it after minimal tests of competence.

Whatever predictions we make for the future arrival of AGI/HLAI, L5 SDCs will be here decades before. I think 2040 is a good upper bound for L5 SDCs, and that's a strong lower bound for the arrival of AGI/HLAI.

Here are two recent, detailed academic surveys of technologies used in autonomous vehicles: Yurtsever et al. 2019 and Badue et al. 2019. Their bottom line is that further advances in AI and perceptual algorithms will be necessary before L5 SDCs are let loose.

Some writers appear to be surprised that SDCs haven't arrived already. But honestly, how could anyone have believed that L5 would be here by now? (That's the same crowd that was disappointed that cancer wasn't cured by 2005, a year after the human genome was sequenced in 2004.)

I give Elon Musk a pass; he's just promoting Tesla sales, a worthy goal. (He and his head of AI, Stanford PhD Andrej Karpathy, certainly know the truth. Here are Karpathy's summaries of the most recent 100,000 articles on machine vision and neural nets. This research is still in the top of the first inning.)

The hype surrounding AI and "deep learning" has been in hyperdrive recently. My favorite critics of the exaggerated claims of AI's vaunted status are MIT Prof. Rodney Brooks (see his 2017 MIT Tech Review Seven Deadly Sins piece,) Prof. Gary Marcus (eg Gary's 2019 debate with Yoshua Bengio at MILA), Prof. Douglas Hofstadter (see his 2018 Atlantic article,) and particularly Prof. Joshua Tenenbaum (see his 2018 Building Machines that See YouTube, previously cited.)

Many of AI's recent headlines are well deserved, notably those from the teams at DeepMind, Google Brain, and OpenAI. My optimism for Level 5 SDCs rests on the pace of those accomplishments and, most particularly, on the resources being brought to bear.

As a rough estimate, my guess is that hundreds of billions of dollars will come into SDC-related fields in the 2020s. Let's call it an even $200 billion worldwide over the decade, or $20 billion per year. Divide that by, say, $200,000 per engineer per year, and you get 100,000 engineers working on the SDC problem and related R&D on sensors, hardware, and AI. (And there is competition, and sharing, at every level: researchers, groups, companies, and countries.) Do you think the L5 SDC problem will be cracked by 2040? I do. Here's what those billions will buy.
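The head-count estimate is a two-step division, shown here with the article's own round numbers: $200 billion over ten years, and $200,000 per engineer-year. Treating all of the money as salary makes the result an upper bound, since some of it goes to hardware, test fleets, and compute.

```python
def engineers_funded(total_usd: float, years: float, cost_per_engineer_usd: float) -> float:
    """Engineers per year that a multi-year budget can pay for, if all of it went to salaries."""
    annual_budget = total_usd / years
    return annual_budget / cost_per_engineer_usd


if __name__ == "__main__":
    print(engineers_funded(200e9, 10, 200_000))  # 100000.0
```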

Look, for example, at a list of employees sought by SDC top contender Aurora: 42 positions in hardware alone, and dozens more in software and operations. Here's a similar list at Waymo. There are already tens of thousands of engineers working the SDC problem from every angle. The demand for machine learning engineers has grown by 344% in the past five years.

This has been well covered by others, notably in this widely cited paper from Brookings in October 2017. As they state, it's hard to sum it all up, because the big car companies (all of whom are involved) and their Tier One suppliers don't usually break out their SDC R&D expenses. Nonetheless, their bottom line was that...

Eighty billion dollars had been invested from 2014 through Q3 of 2017! That included forty-six billion in 2015 alone for R&D. Their conclusion was that more than $80 billion would be invested in 2018 and every year beyond that.

This list from December, 2019 shows more recent corporate and venture capital activity. At the top was $3.4 billion in funding for Cruise Automation, acquired by GM in 2016. Here, TechCrunch provides a 2020 update on Cruise's $7.25 billion war chest.

Aurora, one of my hometown favorites, had $530M invested as of early 2019. Another of my favorite hometown companies is Argo AI, which partners with Ford and VW. It already has 500 employees, but it's looking for hundreds more.

Recent investments by Google/Alphabet in its subsidiary Waymo are not broken out. But Waymo is said to contribute $100B to Alphabet's total market cap. Separately, Zoox got $290M in funding; Pony.AI got $264M; Nauto, $174M; Preferred Networks, $130M; and so on.

Lists like this one from Angel.co only add up VC money going into startups. That's to say nothing of intracorporate spending on R&D or on related fields like 5G telecom or AI GPUs and TPUs. The buzz in December, 2019 was that Intel had just bought AI hardware startup Habana for $2 billion.

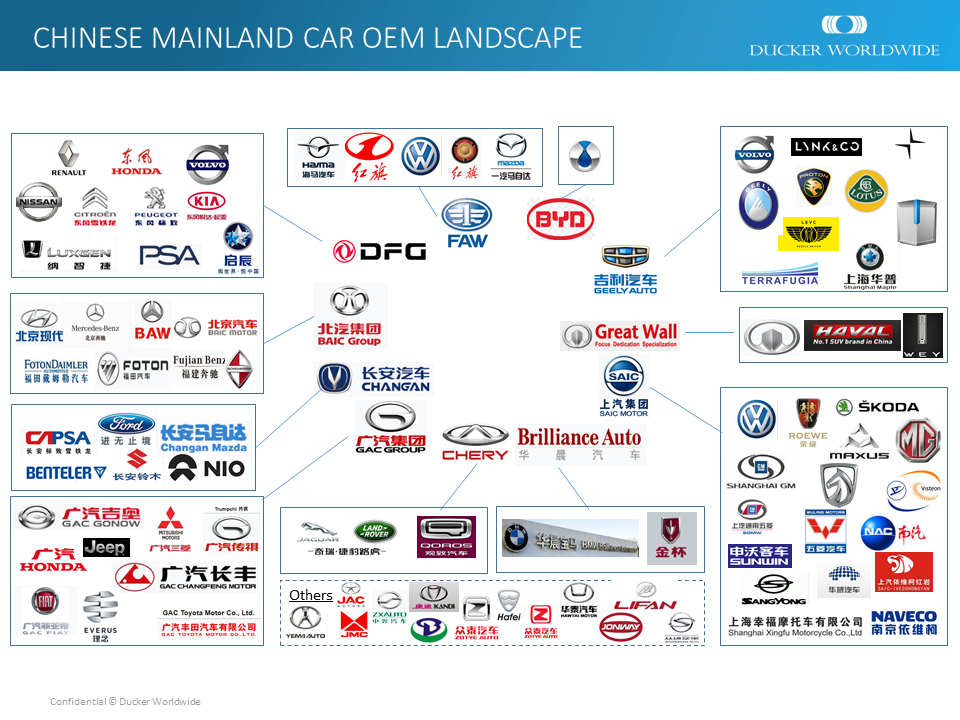

Meanwhile, development of autonomous vehicles in China is rapidly accelerating.

So how good is machine vision? The answer in 2020 is: not very. I'll break it down into two categories: 1) perceiving and understanding static images like photos and 2) perceiving and understanding videos (fast sequences of frames).

I've heard hundreds of talks at Stanford on both machine vision and brain-based vision. The state of the art of both has made great recent strides but is just getting off the ground.

Take a good look at this amusing photo of former President Barack Obama. (This example is from Prof. Josh Tenenbaum's lecture at MIT.)

You can figure out the joke — your robot can't.

Getting the joke in this photo requires both intuitive physics and intuitive psychology. The guy with the clipboard is weighing himself. Unbeknownst to him, Barack has his foot on the scale. The guy can't believe he weighs that much. Obama's entourage gets the joke (but an observing robot in 2020 would be clueless!)

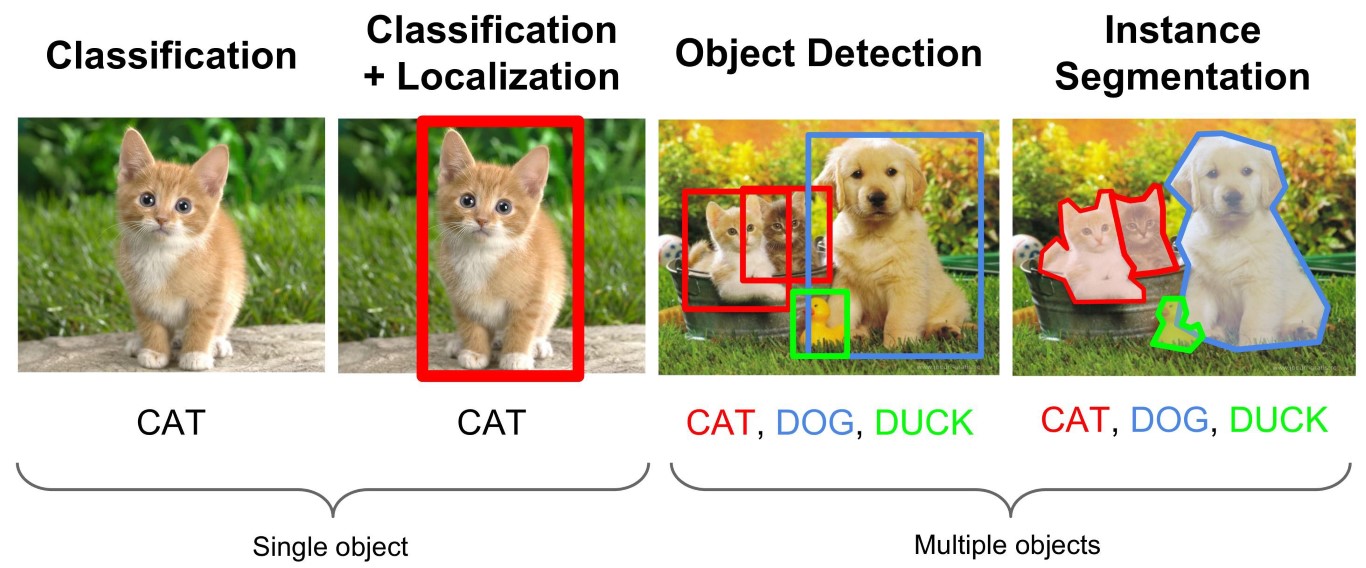

Even static images like this are beyond the capabilities of present day machine vision. We not only get the joke, but we see the texture of the suits, the pattern of the tiles, the relative positions of all the objects in the locker room, etc. The next illustration shows some of the tasks that present day machine vision can accomplish.

I won't attempt to summarize all of recent work in machine vision. But, I'll mention two crucial landmarks. First was the 2010 development at Stanford of the ImageNet database of about a million annotated images falling into a thousand categories (eg deer versus gazelle.) (The work was done by my upstairs neighbor Prof. Olga Russakovsky in the lab of her thesis advisor, the visionary Prof. Fei-Fei Li.)

Second was the huge leap in ImageNet classification accuracy made in 2012 by AlexNet, reported in this paper: Krizhevsky, Sutskever, and Hinton (cited 53,000 times!). By using "deep" convolutional neural nets (CNNs), AlexNet put the field of machine vision into hyperdrive.
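The core operation of a CNN layer is small enough to write out by hand. The sketch below is plain Python with a hand-picked edge filter and is not AlexNet's code. It implements "valid" 2-D cross-correlation, which deep-learning libraries conventionally call convolution, followed by a ReLU. A trained network learns thousands of such filters and stacks many layers of them.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Elementwise max(0, x), the usual nonlinearity between CNN layers."""
    return [[max(0, v) for v in row] for row in feature_map]


if __name__ == "__main__":
    # A 4x4 image: dark on the left, bright on the right.
    image = [[0, 0, 1, 1] for _ in range(4)]
    # Hand-picked vertical-edge detector; a trained CNN learns such weights itself.
    kernel = [[-1, 1],
              [-1, 1]]
    print(relu(conv2d(image, kernel)))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The filter fires only where dark turns to bright. After the ReLU, a bright-to-dark edge produces nothing, so each learned filter becomes a feature detector for one specific pattern.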

Present-day multilayer CNNs exceed even human performance on the static images of ImageNet. And yet, as capable as they are, they're really just image classifiers: eg dogs versus cats. Humans are capable of far more.

As we drive down the road and encounter challenging situations, we resort to what psychologist Daniel Kahneman calls System 2 cognition (thoughtful, deliberate reasoning and planning). This is beyond the current state of the art of AI and is the subject of this 2019 talk by Prof. Yoshua Bengio at NeurIPS.

Bengio is envisioning systems that abstract the objects of their "subjective" worlds from CNNs, but that can then use those objects to do causal reasoning, grounded in detailed images.

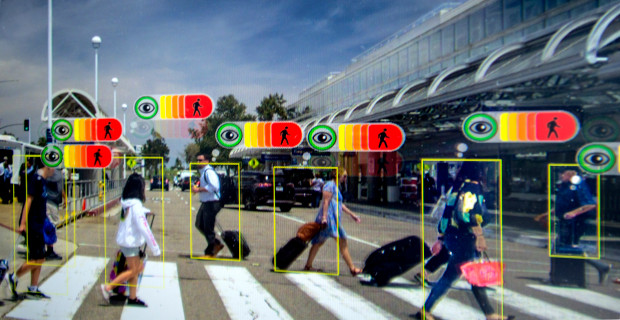

Perceptive Automata's pedestrian thought bubbles

By betting on L5 SDCs arriving by 2040, I'm implicitly betting that this AI research will have matured enough to support autonomous driving. This will involve systems that deal in real time with possible dangers five seconds into the future and can calculate complex tradeoffs. An early hint of such a system can be seen in Perceptive Automata's website and videos. Perceptive Automata labels imaged pedestrians with "thought bubbles." Is that pedestrian paying attention to me (the SDC), and is she now intending to cross the street?

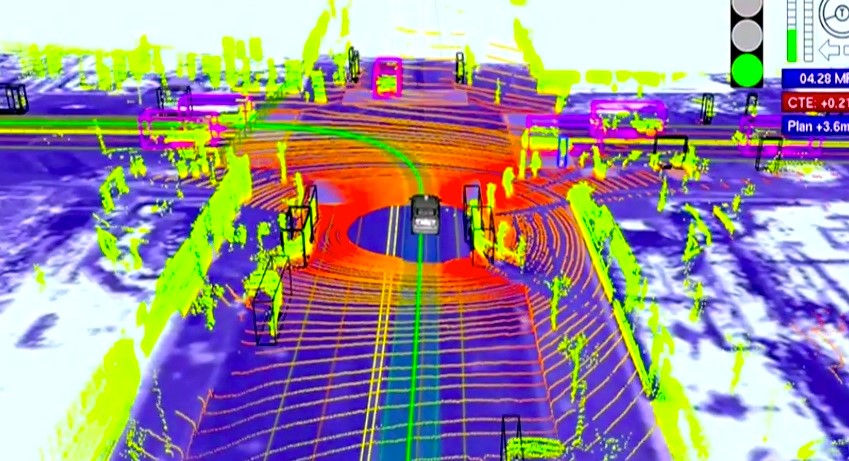

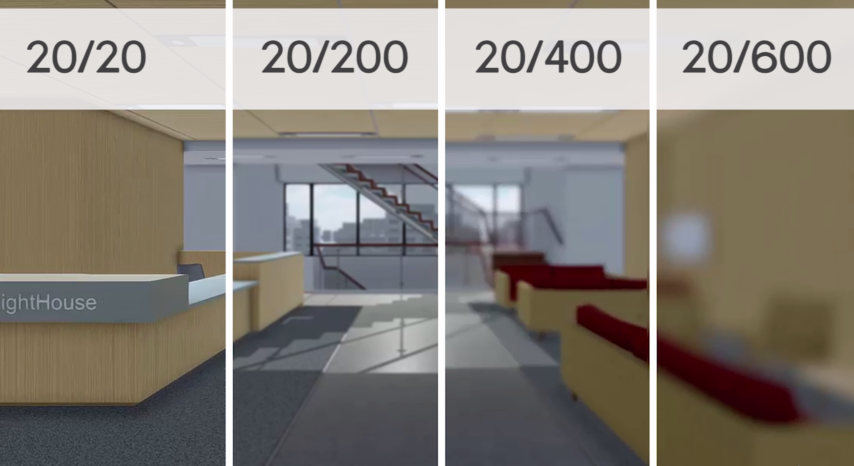

Take a look at any YouTube video (eg Chris Urmson's) showing what an SDC sees as it drives down the street. You'll see bounding boxes (like moving coffins) surrounding pedestrians, cyclists, and other cars. This is like having 20/200 vision or worse (what I see at 200 feet, the car sees only at 20 feet). Obviously, you would flunk the vision test at the DMV with vision like that (not to mention killing people on the road). Here's what the world looks like with 20/200 vision.

As we look at one another, we see a richly textured human being with facial expressions, poses, and gestures we've learned to recognize since childhood. The visual world is infinitely richer than a world of bounding boxes. Interpreting that visual world occupies a full third of our brains (and trillions of synapses). That's the world that Tesla Autopilot is trying to compute. Unfortunately, it's way beyond the state of the art in 2020.

Evolutionary theorist Prof. Donald Hoffman makes the point in this TED talk (well accepted by cognitive scientists, if not by the general public) that what we take to be the real world is, in fact, not. Rather, our conscious world is a carefully constructed approximate model of whatever is out there, mainly useful for passing on our genes... the end.

According to that view, the vast distinction between the point clouds of machine vision and the patterns of neuronal spikes from our optic nerves would seem to diminish. Yes, but are the SDCs actually experiencing anything? The answer in the year 2020 is arguably "no." But, I expect that distinction to blur in the coming decades, as the sensors, perceptual hardware, and algorithms improve and control loops tighten.

As was the case in the animal kingdom, evolution of machine vision will be driven by competition (and also by the sharing of winning designs.)

I love watching YouTubes from SDC industry conferences like AutoSens. Every year brings new capabilities.

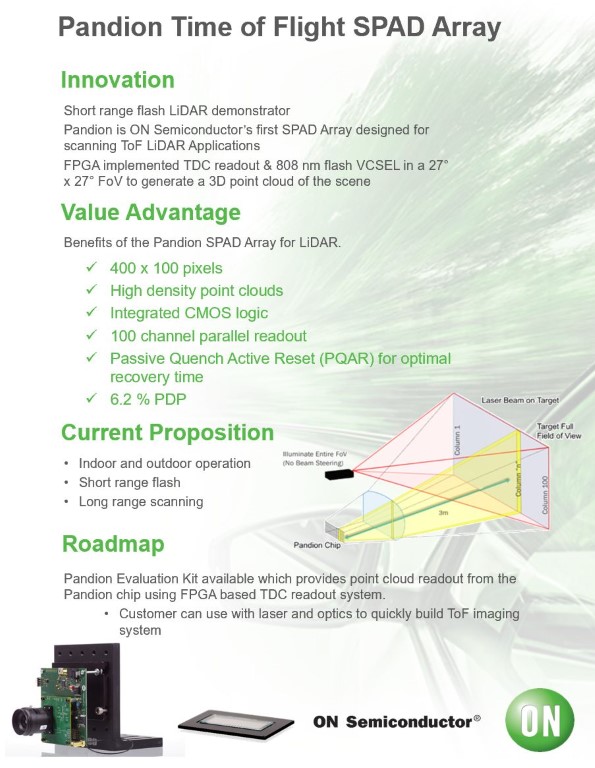

Watch Wade Appelman's talk from AutoSens 2019 (he's head of LiDAR at ON Semiconductor). He estimates there are seventy separate LiDAR R&D programs at car companies, Tier Ones, and startups. You may've heard of Velodyne and Waymo, but how about Valeo, Leosphere, Riegl, Quanergy, Autoliv, Psys, Ouster, Innoviz, Cepton, and Omron? See ON Semi's new Pandion SPAD-array LiDAR (100 by 400 pixels, flash, time-of-flight). The total addressable LiDAR market for 2025 is about six billion dollars.
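"Time of flight" means that range comes from timing a light pulse's round trip. Distance is c·t/2, because the light travels out and back. This minimal sketch uses the vacuum speed of light and ignores the roughly 0.03% slowdown in air. The 200 m target and 1 ns timing figure are illustrative, not Pandion specifications.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def range_from_round_trip(t_s: float) -> float:
    """Target distance from a pulse's round-trip time: the light goes out and back."""
    return C * t_s / 2


def round_trip_time(range_m: float) -> float:
    """Round-trip time for a target at the given range."""
    return 2 * range_m / C


def range_resolution(timing_resolution_s: float) -> float:
    """Depth step corresponding to the detector's timing resolution."""
    return C * timing_resolution_s / 2


if __name__ == "__main__":
    print(f"{round_trip_time(200.0) * 1e9:.0f} ns round trip to a target 200 m away")
    print(f"{range_resolution(1e-9) * 100:.0f} cm of depth per nanosecond of timing")
```

Every nanosecond of timing jitter costs about 15 cm of depth. This is one reason single-photon detectors with very fast timing matter for automotive LiDAR.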

The pace of innovation in LiDAR is stunning. The detectors have increased their sensitivity tenfold over the past decade (to 12% of incident IR), and costs have dropped a hundredfold. The old $75,000 rotating Velodynes will soon be obsolete. The new arrays are solid-state CMOS and can raster scan, flash, or beam steer. They use single-photon avalanche diodes (SPADs) or silicon photomultipliers.

New solid-state LiDARs were shining brightly at the 2020 Consumer Electronics Show (CES) in Las Vegas including Velodyne's new $100 Velabit.

Each of your eyes brings in about a gigabit per second of data. The sensors on an autonomous vehicle (AV) will bring in at least that. One of the crucial features of human vision is that we saccade (move) our eyes about four times per second. That way our foveal (sharpest) vision is always taking in the most relevant field of view (only the central five degrees.) This has the added advantage that it reduces the processing requirements of the visual cortex.

That same trick is just now being exploited in AV sensor suites. It's an advantage if you can focus your upstream processing on the child in the road rather than the leaves on every tree.
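The saving from foveation is a matter of counting pixels. This sketch assumes a hypothetical 4K camera, a 640x480 full-resolution "fovea," and a periphery downsampled 4x in each direction. For simplicity, the downsampled periphery also covers the fovea's area.

```python
def full_frame_pixels(width: int, height: int) -> int:
    """Pixels per frame if everything is processed at full resolution."""
    return width * height


def foveated_pixels(width: int, height: int, roi_w: int, roi_h: int, periphery_factor: int) -> int:
    """Pixels to process: a full-resolution region of interest plus a downsampled periphery.

    For simplicity the downsampled periphery also covers the ROI area.
    """
    periphery = (width // periphery_factor) * (height // periphery_factor)
    return roi_w * roi_h + periphery


if __name__ == "__main__":
    full = full_frame_pixels(3840, 2160)              # hypothetical 4K camera
    fov = foveated_pixels(3840, 2160, 640, 480, 4)    # hypothetical 640x480 "fovea"
    print(full, fov, f"{fov / full:.1%}")             # about a tenth of the full-frame load
```

Moving the fovea to wherever the child in the road is keeps detail where it matters, at about a tenth of the compute in this configuration.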

See the AutoSens talk by Ronny Cohen (CEO of VayaVision). VayaVision upsamples LiDAR data to get what he calls RGBd data: the distance to every single pixel in a camera's image, obtained by fusing LiDAR and video with smart interpolation. It's a crucial trick, something like what we do with binocular vision: getting the distance to every pixel. (That was the message of those psychedelic autostereograms from the sixties.) You want to do your sensory fusion as early as possible in the stream (not during downstream cognition). VayaVision can detect a skateboard in the road at 32 meters. Nvidia's Drive Labs is also hard at work on fusing LiDAR and camera images.
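The basic steps of early fusion can be sketched as follows. First, project each LiDAR return into the camera image with a pinhole model. Then interpolate the sparse depths onto every pixel. VayaVision's actual method is not described here. Inverse-distance weighting is a swapped-in, simple baseline, and the sketch assumes the points are already transformed into the camera frame. A real system would also need extrinsic calibration and edge-aware interpolation guided by the image.

```python
def project_to_image(points_cam, fx, fy, cx, cy):
    """Pinhole projection of 3-D points already expressed in the camera frame.

    Convention (assumed): x right, y down, z forward, all in metres.
    Returns (u, v, depth) tuples; points behind the camera are dropped.
    """
    projected = []
    for x, y, z in points_cam:
        if z <= 0:
            continue
        projected.append((fx * x / z + cx, fy * y / z + cy, z))
    return projected


def densify_depth(samples, width, height, power=2.0):
    """Inverse-distance-weighted depth for every pixel from sparse (u, v, depth) samples."""
    if not samples:
        raise ValueError("need at least one LiDAR sample")
    depth = []
    for row in range(height):
        line = []
        for col in range(width):
            num = den = 0.0
            exact = None
            for u, v, z in samples:
                d2 = (u - col) ** 2 + (v - row) ** 2
                if d2 == 0:
                    exact = z  # a LiDAR return lands exactly on this pixel
                    break
                w = d2 ** (-power / 2)  # weight = 1 / distance**power
                num += w * z
                den += w
            line.append(exact if exact is not None else num / den)
        depth.append(line)
    return depth


if __name__ == "__main__":
    points = [(-1.0, 0.0, 10.0), (1.0, 0.0, 20.0)]  # two hypothetical LiDAR returns
    samples = project_to_image(points, fx=100.0, fy=100.0, cx=10.0, cy=2.0)
    dense = densify_depth(samples, width=16, height=5)
    print([round(d, 1) for d in dense[2]])  # per-pixel depth along the middle row
```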

By restricting their field of view, future sensors should be able to achieve the fast refresh rates and sub-0.1-degree resolution that may be required to read body postures, hand gestures, and maybe even facial expressions while cruising through an intersection.
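The 0.1 degree figure can be compared with the 20/200 analogy above using the standard Snellen convention, in which 20/20 vision resolves 1 arcminute. This minimal sketch assumes a hypothetical 10 cm hand-scale feature. Its one-resolution-cell criterion is optimistic, since recognizing a gesture needs many cells across it.

```python
import math


def snellen_denominator(res_deg: float) -> float:
    """Snellen 20/x equivalent: 20/20 corresponds to resolving 1 arcminute."""
    return 20.0 * res_deg * 60.0


def resolution_from_snellen(snellen_denom: float) -> float:
    """Angular resolution in degrees for 20/snellen_denom vision."""
    return snellen_denom / 20.0 / 60.0


def max_resolvable_range_m(feature_m: float, res_deg: float) -> float:
    """Farthest distance at which a feature of the given size spans one resolution cell."""
    return feature_m / math.tan(math.radians(res_deg))


if __name__ == "__main__":
    print(snellen_denominator(0.1))               # 0.1 degree is roughly 20/120 vision
    print(round(resolution_from_snellen(200), 3))  # 20/200 is about 0.167 degree
    print(round(max_resolvable_range_m(0.10, 0.1), 1), "m for a 10 cm feature")
```

By this measure, a sub-0.1-degree sensor would beat 20/200 but still fall well short of 20/20, which corresponds to 1/60 of a degree.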

At Tesla's Autonomy Day for SDC analysts in April 2019, Elon made a notorious declaration: "LiDAR is an expensive appendix ... a loser's game; having several is just ridiculous!" Musk's statement (comparing LiDAR to a useless appendage that exists only for surgeons to cut out) is controversial, to say the least. But is it true? (His head of Tesla Autopilot, the formidable Andrej Karpathy, seems to agree, and he knows the literature. (Stanford has to pay up to keep its machine vision talent from migrating to industry, unsuccessfully in Andrej's case.) See Karpathy's summaries of 100,000 recent papers on machine learning and vision from arXiv.)

As Elon points out, a human drives just fine without a laser firing out of his head. We use binocular vision (fusing the image from our two eyes) to estimate depth. And, we do it at the level of individual pixels. (We also use several monocular cues like motion parallax.)
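Stereo depth follows the textbook relation Z = f·B/d for rectified cameras, where f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity in pixels. Its first-order error grows with the square of distance. This sketch uses hypothetical numbers: a 1000 px focal length, a 30 cm baseline, and a quarter-pixel matching error. For comparison, human eyes sit only about 6.5 cm apart.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo: depth Z = f * B / d for rectified cameras."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(focal_px: float, baseline_m: float, depth_m: float, disparity_error_px: float) -> float:
    """First-order error: dZ ~= Z**2 / (f * B) * dd, so error grows with the square of range."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    f, b = 1000.0, 0.3   # hypothetical: 1000 px focal length, 30 cm camera baseline
    for z in (10.0, 30.0, 60.0):
        print(z, "m:", round(depth_uncertainty(f, b, z, 0.25), 2), "m error")
```

Doubling the range quadruples the error, from 0.75 m at 30 m to 3 m at 60 m in this configuration. That gap is exactly where LiDAR's direct range measurement currently helps most.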

My view is that in a decade or so Musk may be proven right, if and when camera-mediated stereopsis has reached human-level maturity. But that is not the case now. At the moment LiDAR is an indispensable element in calculating distances to objects (and hence in increasing the reliability of bounding boxes). (But this 2019 research at Cornell may pave the way to eliminating the need for LiDAR. See the arXiv pdf from Kilian Weinberger's group.)

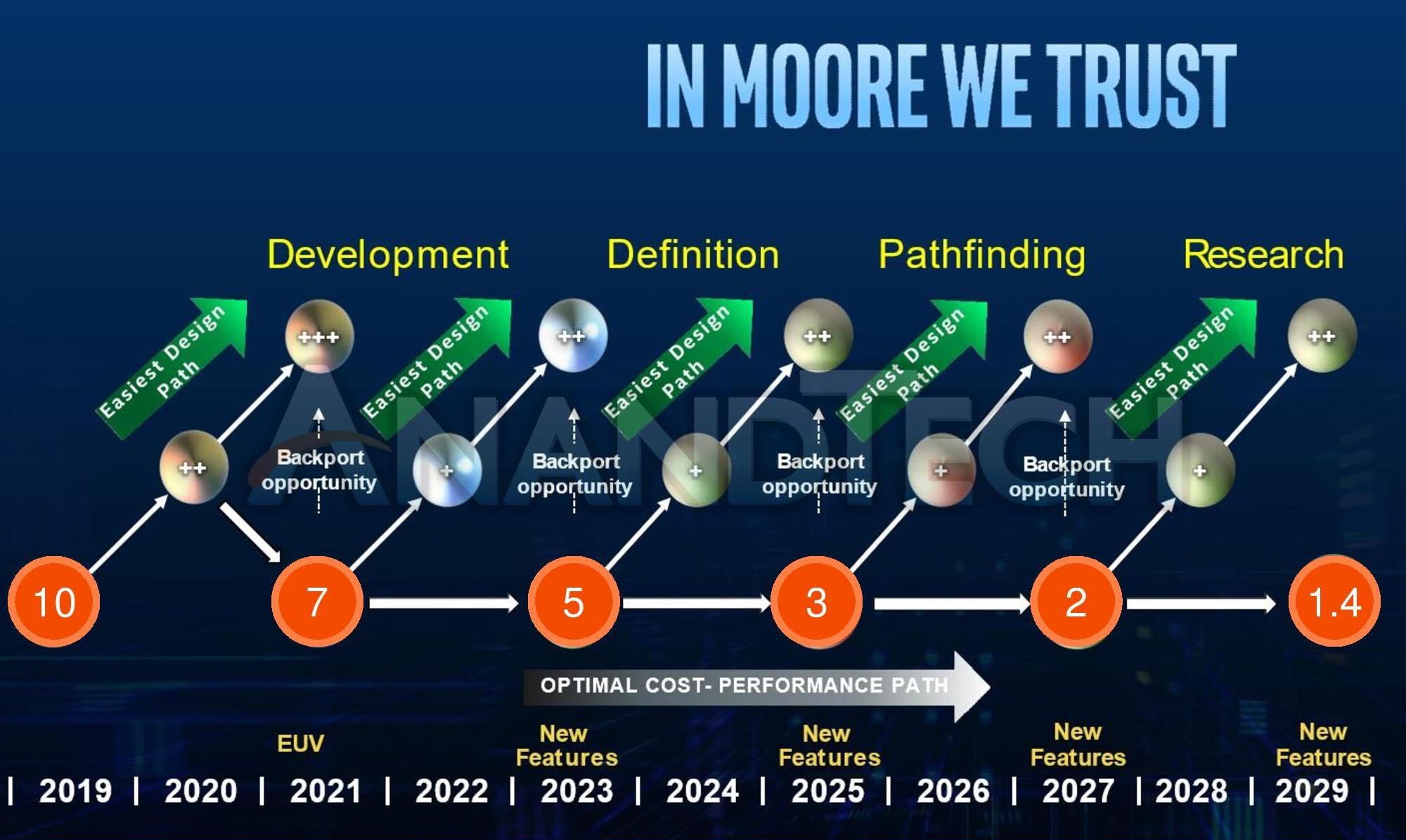

Intel in a race with Taiwan-Semi (orange circles show node sizes in nanometers)

While sensor tech will be racing ahead, CPU development will not be standing still. Above is a recent slide from Intel showing projected nodes from 2020 to 2030. I think it's merely aspirational given Intel's delays with 10 nm. But, as EUV lithography emerges from its adolescence, this chart may gain credibility.

As sensor solutions converge, it seems likely that computations now done in present-day CPUs (including GPUs and TPUs) will be pushed toward the edge (toward the incoming sensor data). That will free central processors to concentrate on the crucial AI tasks (intuitive psychology and physics, reasoning, and planning) once a 3D visual world has been segmented, localized, and classified into objects of importance.

If you're a Moore's Law enthusiast, IEEE's Hot Chips is the place to be on campus in August. (I've attended a few times, when I'm not backpacking.) The 2019 roster included several must-see talks. Don't miss the talks (now on YouTube) by Stanford Prof. Phil Wong (also a VP at Taiwan-Semi; text and slides for his talk are here) and by Lisa Su (CEO of AMD). In this related, inspiring talk at Nvidia's GTC 2019 conference, Jensen Huang (founder of Nvidia) presents the GPU powerhouse's accomplishments and plans. Not to be outdone by Tesla, here Huang unveils Nvidia's new Orin self-driving processor, capable of 200 trillion operations per second.

While I'm a great Tesla fan, I don't think individual car sales are the quickest path to L5 SDCs. I think taxi services like Waymo will get there first. (Nonetheless, I'm delighted when TSLA stock is driven ever higher by short-sellers getting squeezed. (I briefly shorted unknown bookseller Amazon in 1998: big mistake!) Elon Musk's jump-starting of the entire EV industry is a massive contribution.)

During the next decade or two, the sensor suites and downstream hardware will be changing rapidly. A fleet of taxis that can be upgraded en masse might be advantageous as new sensors and GPUs become de rigueur. The sensors (classically, Velodyne LiDARs) are also apt to be prohibitively expensive until the industry slowly converges on a consensus and brings the prices crashing down. Every multifold increase in data rate (resolution times refresh rate) and every adoption of a new sensor modality (maybe even thermal, telescoping zoom, or saccading; who knows?) will require swapping out sensors and processors. That's easier to do with a managed fleet. (Of course, at some point the car may just bring itself in for an upgrade.)

Lyft and Uber are desperately keen on autonomous taxi service, since most of their revenue is now consumed by payouts to drivers. (I'll leave it to Andrew Yang to address the resulting unemployment. (His solution is universal basic income.))

But besides cars, there are several other markets for AVs: long-haul trucks, slow-moving trams in gated communities and campuses, package and pizza delivery, etc. In 2020 Hyundai and Kia announced a $115M investment in Arrival, a British electric delivery van maker. Here's another hundred-billion-dollar market: smart AI-based traffic lights. Get rid of those insane delays at red lights.

by Matt Herring for The Economist

If CNBC, Bloomberg, or IEEE Spectrum had existed 540 million years ago, their headlines would've been about the Cambrian Explosion, the advent of new body plans and new sensors in Earth's oceans. The winners were the animals that most speedily evolved the most accurate eyes.

That's exactly our situation now. Those trillions of dollars of invested capital will drive a speciation of car body types and hone their sensor suites. Evolution will happen through cutthroat competition (and replication of successful plans), just as it did in Earth's primordial seas.

The tech that evolves will leave no corner of our lives untouched. Through improved sensors, AI, and robotics, it will permeate the global supply chain and manufacturing in a virtuous cycle that will touch all branches of science and engineering.

Welcome to the Brave New World!

published: 23 December 2019

updated: 2 March 2020

I welcome substantive comments on all my articles. With your permission I may include excerpts. You can post here anonymously, but to do so you should have a verifiable identity, eg a website, LinkedIn ID, or Facebook page, etc. Mail comments to bob AT bobblum DOT com (with the usual syntax.)

December, 2019 — Many thanks for praise received from David Eagleman, Steve Omohundro, Alan Finkel, David Winkler, Bill Softky, and from Marci H, Cindy A, Scott A, Richard H, James P, John G, Rosie R, Janet M, Peggy P, Trudy R, and Rod M.

28 December 2019 — from Marc S.

WOW!! An absolutely fascinating overview of the status/trajectory of SDC development and the critical underlying technologies! I started to quickly scan the beginning/end (as I too often do) and wound up reading it straight through. I couldn’t put it down. In my opinion this deserves wide circulation in the popular press. Your very clear explanations and writing style make it easy to understand the basic framework of complex topics and skip the details for the non-tech inclined. I’ll forward this on to many of my friends. Congratulations on a wonderful, exhaustive paper.

On 29 December 2019, Andrew L. sent this hauntingly beautiful song, which sets to music the wonderful lyrics of Rudyard Kipling's "Hymn of Breaking Strain," celebrating the essential role human error has played historically in engineering accomplishment.

The careful text-books measure – Let all who build beware! – The load, the shock, the pressure material can bear: So, when the buckled girder lets down the grinding span, The blame of loss, or murder, is laid upon the man; Not on the Steel – the Man!

But, in our daily dealing with stone and steel, we find The Gods have no such feeling of justice toward mankind. To no set gauge they make us, for no laid course prepare – In time they overtake us with loads we cannot bear: Too merciless to bear.

The prudent text-books give it in tables at the end – The stress that shears a rivet, or makes a tie-bar bend – What traffic wrecks macadam – what concrete should endure – But we, poor Sons of Adam, have no such literature To warn us or make sure!

We hold all Earth to plunder – all Time and Space as well – Too wonder-stale to wonder at each new miracle; Till in the mid-illusion of Godhood 'neath our hand Falls multiple confusion on all we did or planned – The mighty works we planned.

We only in Creation – how much luckier the bridge and rail! – Abide the twin damnation: to fail and know we fail. Yet we – by which sole token we know we once were Gods – Take shame in being broken, however great the odds – The Burden or the Odds.

Oh, veiled and secret Power, whose paths we seek in vain, Be with us in our hour of overthrow and pain, That we – by which sure token we know Thy ways are true – In spite of being broken, or because of being broken, Rise up and build anew. Stand up and build anew!

Dr. Alan Finkel is Australia's Chief Scientist and served as Chancellor of Monash University from 2008 to 2015. He has recently led a successful campaign urging Australia's government to develop a large-scale hydrogen fuel economy.

In early January 2020, Dr. Finkel wrote to this website:

Wow, Bob, what an incredible website you have compiled. I glanced through your article on SDCs – impressive considerations.

(excerpted) ... if hydrogen is used as a fuel, there are no carbon dioxide emissions. None. So, clean hydrogen will be part of (but by no means all of) the transition to a zero emissions energy future.

After 11 months, on Friday 22 November 2019, as Chair of a special review, I presented a draft Australian national hydrogen strategy to the federal and state government energy ministers, and they adopted all 57 strategic actions without any changes! It is available here. The Commonwealth government followed up by announcing a $370 million hydrogen stimulus package, here. A short video about the strategy can be watched here, and an article by me in The Conversation was published the following Monday.

(RLB) I respond:

I'm delighted at the reception your work received from Australia's government. BUT...

(Please note: I'm a beginner on H2 fuel cells.) My main recent evidence on H2 has come from YouTube, e.g., from Real Engineering. Are his arguments valid? For example, is it true that the efficiency of H2 systems is inferior to that of lithium batteries? And could you remark on the challenges inherent in the widespread adoption of H2 storage infrastructure that would be required?

Dr. Finkel responds:

It is true, hydrogen production and uses have high losses, but if the production is from solar and wind electricity there are no nasties associated with those losses – just cost. The role of hydrogen is seen simplistically by some as a battle against batteries for powering passenger cars. However, the promise of clean hydrogen is much greater. It has three broad classes of use:

On Feb 3, 2020, Walter Cox wrote:

... I have several friends who are experiencing age-related disabilities and who hope for autonomous vehicles in the short term. In that regard, your piece strikes a nice balance between hopefulness and pessimism ...

For myself, I have found the newly available driving aids (automatic braking, adaptive cruise, blind-spot monitoring systems…) both useful and frustrating. Driving at night on a recent cross-country trip, automatic braking meant my car decelerated far sooner than I would have on my own – I saw brake lights, yet at 80 mph could not discern the rate of deceleration of the car ahead as readily as my radar-equipped system. ...

I have noted the Tesla Autopilot fatalities as they have occurred. First was the May 2016 Florida crash, in which the Tesla's Autopilot system failed to notice a semi-trailer crossing its path and continued at full speed, shearing off the car's roof. Then there was the March 2018 case of Mountain View engineer Walter Huang. Huang's Tesla killed him when its Autopilot system decided that errant lane markings should take precedence over the presence of a concrete traffic diverter while he was traveling south on Highway 101; little remained of the Tesla, and Huang's injuries were massive. ...

Tesla’s problematic Autopilot system, which does not officially claim to be autonomous, nevertheless highlights the issues you note in your piece – even rookie drivers would have noticed the presence of a semi-trailer or a concrete barrier directly in their path, and rapid acceleration (what the Tesla actually did at the intersection of Highway 101 and Highway 85) would not have been a normal choice.

I am sure you are fully aware of all this. I mention the Tesla Autopilot fatalities because they stand in stark contrast to Cadillac’s less ambitious hands-free SuperCruise system, which is intended to operate ONLY on carefully mapped interstates, notably without lane-changing capability. It has logged several million miles so far with no problems, and a close relative of advancing years is seriously considering the purchase of a Cadillac CT5 specifically to take advantage of its freeway-only SuperCruise system.

Thanks again for sharing your most recent work!

(RLB) I responded on Feb 10, 2020:

... My main personal interest in researching this article was really to try to answer for myself when Level 4/5 would actually arrive. It'll be a huge milestone for AI. You may have noticed that MIT Roomba roboticist Rod Brooks thinks it will be 50 to 100+ years. I love the SDC task because it might just be cracked while you and I are still alive. Cracking machine vision is really daunting (but evidently just so tantalizingly close that VCs and car companies are willing to pour tens of billions into it.)

On Feb 11, 2020, Walter Cox continued:

I absolutely agree that L2/L3 has the fatal downside of inducing a false sense of safety. Ultimately Walter Huang was responsible for trusting his Tesla’s Autopilot system too much; perhaps he assumed he could intervene quickly enough if the system erred, which did not turn out to be the case.

When I turn over limited functions (following distance, road speed) to my adaptive cruise system, I assume the same; however, I am still actively engaged with the task of driving, and the automatic responses that I built into my psyche (over the past 60 years, since I began driving country roads at age 12) remain in force ...

What I see in contemporary society (and an overwhelming percentage of working engineers are now at least a generation behind us) is a dangerous lack of conservatism. They revere the “new” and too readily abandon the “tried and true.” We are all responsible for this change in attitude, since we constantly demand the new; this makes tried-and-true products and methods economically unsustainable, perhaps prematurely. Much of what now constitutes the physical world lacks durability; it especially lacks repairability. San Francisco architects and engineers seemingly have forgotten how to build adequate foundations, as Millennium Tower continues sinking (and, more ominously, tilting) due to differential settling of its untested foundation design. How to repair it? No one really knows. ...

I think contemporary engineers trust technology too much, and many seem to have no real appreciation for “fail safe” strategies that leave ultimate authority in human hands. The Boeing 737 MAX debacle points this up: Boeing failed to incorporate redundant sensor systems in their horizontal stabilizer trim flight control systems, and Boeing’s engineers considered their engineered system more reliable than human pilots. This led to their initial decision not to train pilots in how to disable the system should it malfunction.

My automatic response of hitting the brake disables any cruise control system, old school or adaptive; such an intuitive disabling approach would have saved both Lion Air Flight 610 and Ethiopian Airlines Flight 302, along with their 346 passengers and crew. Yet Boeing’s 737 MAX mechanical/software failures, combined with lack of pilot training, meant pilots were unable to overcome the repeated actions of their on-board automated horizontal stabilizer trim flight control actuators, which drove both planes into the ground.

Most of our contemporary systems (mechanical, technological, economic…) seem fragile to me. I suppose only some massive failure will lead contemporary engineers to adopt a more conservative approach.

7 Dec 2020: I just watched an excellent 60 Minutes segment on driverless trucks. It's online but behind a (free) CBS registration wall. But here's a transcript of the show, which aired in August 2020. The show features the self-driving efforts of Starsky Robotics and TuSimple. They expect to have unmanned demo big rigs on the road in 2021. These autonomous trucks will eventually put millions of truckers out of work. This was part of the motivation for presidential candidate Andrew Yang's advocacy for Universal Basic Income (free money). Search YouTube for "self-driving trucks." There are dozens of worthwhile video reports.

7 Dec 2020: Tesla stock hits $645 post-split (that's $3,225 in pre-split terms, given the 5-for-1 split). It's a bloodbath for short-sellers.

11 Mar 2021: To put passengers at ease, future driverless cars may want to take a tip from Johnny Cab seen here — adding a little light banter.

30 Mar 2022: TechCrunch reported today that Waymo is testing full self-driving — NO safety driver — in San Francisco for its employees. This Waymo video features the SF rollout. My hat's off to Waymo, if this includes rush hour operation across Market Street.

2 April 2022: This Full Stack Economics article explains Waymo's new robotaxi service in San Francisco. The map perfectly explains why Waymo hasn't expanded faster. The crucial fact is that SF's downtown is excluded. Driving downtown during rush hour in SF (or in any big city) will be beyond the state of the art for the next several years. The author also makes an important economic point: people mainly hire taxis in high-density areas like downtown, not in the suburbs (where driving is relaxed for autonomous vehicles).

16 May 2022: Here, Financial Times journalist Peter Campbell interviews Elon Musk about Twitter, Tesla, and Trump. Campbell is a superb interviewer: brilliant questions, concisely stated. The news is that the Twitter deal will take at least two more months to complete (if it happens at all). Trump has said he doesn't want to use Twitter (which I don't believe for a minute!), but Elon would allow him back on. On Tesla: Musk is still pissed off about Alameda County's restrictions (which helped propel him out of the Bay Area and to Austin, Texas). (I vote Democratic, but sometimes the Left can be idiotic.) The first (uncrewed) Starship trip to Mars won't happen for another 3-5 years. Musk on VTOLs (flying cars): forget it! (Too noisy, too blowy, no good in bad weather, too dangerous.) Musk prefers tunnels: lots of them! (And many more juicy tidbits.)

17 May 2022: CNBC reports that Ford-backed robotaxi start-up Argo AI is ditching its human safety drivers in Miami and Austin. This service is only for Ford employees. But, it is in heavily trafficked areas of those cities and with no safety drivers. Real progress!

18 May 2022: Tesla has been removed from the S&P 500 ESG Index! Again, I vote Democratic, but this is an example of gross stupidity from the Left. See Elon's tweet about "phony social justice warriors." I'm always in favor of equal opportunity, but removing Tesla from a list of environmentally motivated companies is flat-out stupid! It makes the index completely meaningless.

2 June 2022: CNBC reports that Cruise gets green light for robotaxi service in San Francisco. And, here's roboticist Rod Brooks' largely positive reaction. But still, Rod says that it's just "minimally viable."

17 October 2022: Here's an amusing and informative video on Tesla FSD Beta obstacle testing. I enjoyed blogger Steven Loveday's comment about the testing regimen, which involved using his wife as a hidden obstacle in the path of the speeding Tesla: "Don't worry, fans! I got a new insurance policy on my wife!" (She survives the test.)

26 October 2022: I told ya! Self-driving (Level 5) is hard and at least a decade away. Backing that up is this from TechCrunch: Ford, VW-backed Argo AI is shutting down. Ford is taking a $2.7 billion write-off, and hundreds of employees are being laid off. The focus is shifting to (yawn) driver assistance.

27 October 2022: And more from TechCrunch today: Self-Driving Cars Aren't Going to Happen.

1 December 2022: Here's an update on self-driving in SF: Cruise's Robotaxi Revolution is Hitting the Gas in San Francisco. Basically, it's now a two-player contest: GM's Cruise and Alphabet/Google's Waymo. Teenagers intentionally walk in front of the cars to get them to stop. (That would seem to call for a non-lethal deterrent like water balloons or snowballs, or a remote operator yelling "I'm going to tell your mother!")

3 March 2023: This is an excellent update from BBC News: Robotaxi tech improves but can they make money?

The article reports on the battle for the streets of San Francisco between General Motors' Cruise division and Google/Alphabet's Waymo division. Neither company's cars go into downtown (way too tricky), and Cruise currently operates only from 10 PM to 5:30 AM. Both companies offer rides to the public, but you've gotta get on a waiting list to try it.

Companies have plowed over $100 billion into self-driving, but it's harder than anticipated. (If you've read this article from the top, you know what the obstacles are; they're formidable.) Full self-driving is tantamount to AGI (artificial general intelligence). Unlike ChatGPT, self-driving cars should ideally be conscious.

30 March 2023: According to this report from TechCrunch, Waymo has retired its self-driving Chrysler Pacifica minivan. This appears to be a cost-saving move as Google's Waymo division consolidates its self-driving efforts around its all-electric Jaguar I-Pace cars. The service operates in the suburbs of Phoenix, Arizona (ideal weather and broad non-chaotic streets.)

31 March 2023: Recently Bill Gates was Blown Away Riding an Autonomous Car thru London's Chaotic Streets. The meat of this is the embedded YouTube; great fun! Here, Bill rides with the car's software developer, the CEO of Wayve, and with a safety driver (with her hands ready to grab the wheel.) This is impressive stuff, although we are not shown the several disengagements that are mentioned. Navigating London traffic is far more challenging than driving through Phoenix.

15 April 2023: Here's an incredible claim from GM CEO Mary Barra: GM's Cruise on Track for One Billion Dollars in Revenue by 2025! If so, this is remarkable progress by GM. The current Cruise AV is a modified Chevy Bolt EV that retains its steering wheel and pedals. But the company soon aims to deploy its Origin vehicle, which has no steering wheel, pending approval by NHTSA.

15 July 2023: Here, the blogger behind the channel "Asymmetric Investing" reports impressive progress by GM's Cruise division: Tesla Isn't Leading Autonomous Driving: Cruise Is. One crucial metric he reports is miles driven between disengagements. (A disengagement is when the safety driver takes the car out of autonomous mode and into manual mode.) Month over month, Cruise's disengagements are dropping rapidly as its performance improves.
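To make that metric concrete: miles per disengagement is simply autonomous miles divided by the number of disengagements in a reporting period, so "improving" means the number goes up. The minimal Python sketch below assumes made-up monthly figures (not Cruise's actual data); the `MonthlyLog` type and `miles_per_disengagement` helper are hypothetical names for illustration only.

```python
from dataclasses import dataclass


@dataclass
class MonthlyLog:
    """One reporting period of (hypothetical) test-fleet data."""
    month: str
    autonomous_miles: float  # miles driven in autonomous mode
    disengagements: int      # times a safety driver took over


def miles_per_disengagement(log: MonthlyLog) -> float:
    """Higher is better: more autonomous miles between human takeovers."""
    if log.disengagements == 0:
        return float("inf")  # no takeovers recorded in this period
    return log.autonomous_miles / log.disengagements


# Hypothetical numbers for illustration only, not real fleet data.
logs = [
    MonthlyLog("Jan", 10_000, 20),
    MonthlyLog("Feb", 12_000, 15),
    MonthlyLog("Mar", 15_000, 10),
]

for log in logs:
    print(log.month, miles_per_disengagement(log))
```

With these invented figures the sketch prints 500.0, 800.0, and 1500.0 miles per disengagement, the kind of month-over-month rise the blogger describes. Real comparisons are trickier, since what counts as a disengagement and where the miles are driven vary from company to company.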

23 July 2023: We Put Waymo to the Test at Some of San Francisco’s Trickiest Intersections. I had to read that one. Waymo is now operating 200 driverless cars in SF and driving everywhere except the touristy areas in the northeast (Nob Hill, Marina, Tenderloin.) There are 80,000 people on the waiting list wanting to sign up.

10 Aug 2023: The Washington Post reports that California just opened the floodgates for self-driving cars. The California Public Utilities Commission approved permits for Waymo and Cruise to operate cars on San Francisco streets 24 hours a day, 7 days a week. The vote was 3 to 1 after a heated seven-hour meeting.

25 Sept 2023: I'm far more optimistic about exciting innovations in technology than about innovations in humanity. So I wondered about riders in self-driving cars vandalizing the cars. Who better to ask than Claude 2, the latest LLM chatbot from Anthropic? Here's how Claude answered my question about inward-facing cameras.

Based on my knowledge, most self-driving car services do not currently employ inward-facing cameras to monitor riders during normal operation:

- Companies like Waymo, Cruise, Argo AI, Motional and others have generally not indicated use of inward-facing cameras in their rider compartments.

- Inward-facing cameras would likely create privacy concerns amongst riders and the general public.

- Ridesharing services like Uber and Lyft also do not appear to use inward-facing cameras for regular riders based on their public policies.

- Some testing vehicles may have inward-facing cameras for data gathering purposes during development, but these are not intended for monitoring riders long-term.

- Cameras focused on the driver compartment are more common in prototypes to monitor driver attention and readiness to take over control when needed.

- There are no widespread reports or evidence of production autonomous vehicles using inward-facing cameras during normal passenger operation at this time.

Of course policies could change in the future, but for now it seems most companies are avoiding inward-facing cameras in rider compartments for privacy reasons and to build public trust in self-driving services. The rider experience appears focused on comfort, amenities and productivity rather than surveillance.

16 December 2023: Two months ago, a woman in San Francisco was crossing the street and was hit by a passing car driven by a human. The collision threw her into the path of a driverless car. The human driver who hit her then took off (a possible hit-and-run), leaving the woman pinned underneath Cruise's autonomous car. Here's the newscast: "Cruise says it's not at fault for woman injured in San Francisco crash."

Obviously, this incident underscores the continuing need for safety improvements in self-driving cars, but it seems to me that the California DMV's reaction (shutting down Cruise's operation in California) was heavy-handed and works against that improvement.

Where's the indignation about the human driver who committed the hit-and-run in the first place? (That's what SDCs are intended to make obsolete.) I'm guessing that if the second car had been driven by a human, the pedestrian would still have been pinned. It's just physics (although an attentive driver might have stopped sooner, depending on the speeds involved).

This condemnation is the typical "speciesism" I see whenever AIs are judged. I'm not sure about the future, but in 2023 it's humans, not machines, who are ruining the planet and killing millions of other humans.

21 Dec 2023: Here, Ars Technica reports "7.1 million miles, 3 minor injuries: Waymo’s safety data looks good," with the subtitle "Waymo says its cars cause injuries six times less often than human drivers."
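The headline numbers can be turned into a per-mile rate, which is how safety comparisons like "six times less often" are usually framed. The small Python sketch below uses only the two figures quoted in the headline; treating "six times" as a simple ratio of per-million-mile rates is an assumption made for illustration, since the underlying human benchmark (and how injuries are counted) isn't given here. `rate_per_million` is a hypothetical helper.

```python
def rate_per_million(events: int, miles: float) -> float:
    """Events per million miles driven."""
    if miles <= 0:
        raise ValueError("miles must be positive")
    return events / miles * 1_000_000


# Figures quoted in the Ars Technica headline: 3 minor injuries in 7.1 million miles.
waymo_rate = rate_per_million(3, 7.1e6)
print(f"Waymo: {waymo_rate:.2f} injuries per million miles")

# ASSUMPTION: read "six times less often" as a simple ratio of per-mile rates.
# This only indicates the rough size of the human baseline it implies.
implied_human_rate = waymo_rate * 6
print(f"Implied human baseline: about {implied_human_rate:.1f} per million miles")
```

This prints roughly 0.42 injuries per million miles for Waymo and an implied human baseline of about 2.5. Both are back-of-the-envelope figures, and the real comparison depends on matching road types, cities, and injury definitions.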

This substantiates my point above about Cruise's plight in California. Halting Cruise's operation will slow the rollout of self-driving tech and thereby harm consumers.

28 March 2024: I've followed AI researcher Brad Templeton's work for a couple of decades, and he really understands the issues. In this Forbes article, Waymo Runs a Red Light, Brad brings us up to date on a few recent accidents involving self-driving cars. Their accidents are few and far between, but the ones that are bizarrely different from human accidents always get the headlines. One example is the Cruise incident described above, which should not have been a reason to shut down Cruise's entire fleet.

7 April 2024: China's Real Estate Collapse Has Only Just Begun: this is a spectacularly good update and summary of China's economy by macroeconomist Brian McCarthy. Basically, absolutely everything in the PRC is paid for directly or indirectly via China's central bank, which (like the US but on a far grander scale) just keeps printing money to pay for everything: real estate, infrastructure, Belt and Road, and tech development. I love his quote: "a rolling loan gathers no loss" (until it doesn't). China is one giant Ponzi scheme.

But there may be one upside to Xi Jinping's printing trillions of yuan to support the manufacturing of millions of electric vehicles. The atmosphere (and global warming) doesn't care whether the cars are made by Geely and BYD (which are massively subsidized) or by Ford and GM (or by Tesla). There just need to be fewer gas guzzlers.

(Substantive comments may be emailed to bob AT bobblum DOT com. With your permission excerpts may be posted here.)