2025 Note: I'm actively updating this section on AI and robotics, but that mainly concerns my "Notable Links" section at the bottom. The top half of this section is an index to the articles I myself have written over the years and is ordered according to some combination of recency and importance. (My 1970s work on the RX Project at Stanford is still important. Nothing like it has been done since.)

The fields of AI and robotics are advancing so rapidly that it's hard to keep up. (And, forget trying to do humanoid robotics without AI/machine learning.)

ChatGPT, GPT4, and other large language models (LLMs) are a huge leap forward on the inevitable path to human-level AI. OpenAI's ChatGPT scored 100 million signups in 60 days. Microsoft has put 11 billion dollars into OpenAI (market cap 40 billion dollars.) Google and Meta (Facebook) with competing LLMs are hot on their heels. Apple will soon join the party.

GPT4 scores in the top 10% on a simulated bar exam. It also aces programming problems, scoring in the top 10% on coding problems given to Silicon Valley applicants.

But, it seems that it's SCARY GOOD! The Future of Life Institute circulated a petition calling for a 6 month halt on the further rollout of LLMs. It's been signed by some top AI researchers and tens of thousands of others. Here I weigh in on the petition. I view the expressed concern as well-motivated. But the proposal is infeasible and impossible to monitor.

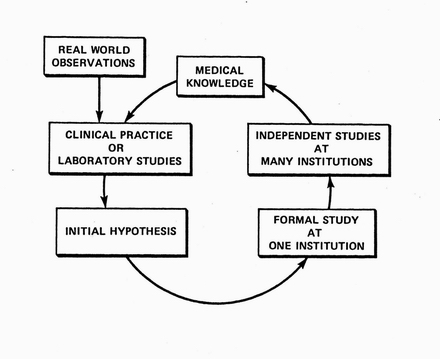

A computer, tirelessly combing through patient records, looking for new medical knowledge — that was my 1981 Stanford PhD thesis project — the first example of data mining under autonomous AI control.

Under development at Stanford from 1976 to 1986, RX won many awards and contracts and was presented worldwide.

Who else would've been foolhardy enough to try this in the era when CPUs were crawling along chugging data on mag tape? Now, everybody's in the machine-learning and big data game. It's still not easy!

I bought an Oculus Quest 2 in November, 2021. I love it! Here are my "excellent adventures" with this must-have VR headset. (BTW, That's a picture of blogger Nathie — I'm fifty years older.)

Even if you're a senior (age > 39,) VR is a great place to hang out while Covid burns itself out. Here I describe the games and apps I've tried on Facebook's (now Meta's) Oculus Quest 2 headset. I especially enjoy apps for dancing like Synth Riders and Beat Saber and apps for boxing (Creed and Thrill of the Fight) and parkour (Stride.) But my top pick is Eleven, a table tennis (ping pong) game with stunningly realistic physics. What I really enjoy is chatting with opponents from around the globe as we play.

Self-Driving cars (SDCs) will be the most important drivers of machine vision, neural network architectures, and AI processor hardware over the 2020s and 2030s. The case boils down to money and technology acceleration. Money (hundreds of billions of dollars in R&D) will be invested in the 2020s. That money will translate into a hundred thousand engineering jobs worldwide as companies and countries in Asia and the West compete for dominance. This article is a heads-up display of that thesis and its ramifications.

Since my days as a math/cog sci undergrad at MIT, I've been interested in two questions:

(And, might a "conscious, subjective world" be a necessity for human level intelligence, for machine intelligence, in general, and for self-driving cars, in particular.)

Here, I review the state of the art of autonomous vehicles, their sensors, their CPUs and the companies that make them. Cars may need steering wheels in traffic until the mid 2020s. But, I think by the late 2020s we'll be able to throw them away.

Living in Silicon Valley, I'm used to watching Google's self-driving cars dodge me as I ride around town on my electric bike. But, are they conscious?

No (not yet!) But, here I address what it will take to make them conscious — and, why would you bother? Also, what's the difference between machine vision and mammalian visual perception? Led by advances in neuroscience, computer vision researchers are rapidly accelerating (but have quite a ways to go.)

These are my detailed notes on the many lectures I attend every week at Stanford. These frequently feature cutting edge research by our faculty, students, and visiting superstars. My WebBrain contains hundreds of archived lecture notes (last updated January, 2020.) To obtain more recent notes, contact me.

For over fifty years my interest has been driven by just two issues —

I've also had a multi-decade interest in health (especially now in my seventies.) That motivates my close attention to cardiology (eg, Stanford's CV Institute series,) molecular biology, and longevity studies (eg, Stanford's Glenn Foundation series.)

Here's my CV from 1986 when I left Stanford to go back into clinical practice (for twenty years at Kaiser.) Reasons for leaving academic AI:

1) emergency medicine can be a thrill, 2) higher salary, 3) local family ties, 4) love of Silicon Valley, 5) impending AI Winter.

I retired from clinical medicine in 2007 and came back to Stanford (as an Affiliate of the Center for Mind, Brain, and Computation.) I've been a perennial student of cognitive neuroscience since my undergrad days at MIT in the sixties. I continued that interest as a neuroscience MD/PhD student at UCSF, also (after finishing my residency,) as a research associate/principal investigator in AI at Stanford.

MD/PhD students, perhaps daunted by my career switches, occasionally ask me for career advice. Basically, a career choice is constrained by 1) what you love, 2) your skills, 3) the market for your skills, and 4) where you and your family want to live.

My little one page story showing what real, strong AI will be able to do.

This was my sci-fi reply to the question "when will computers be smarter than humans?"

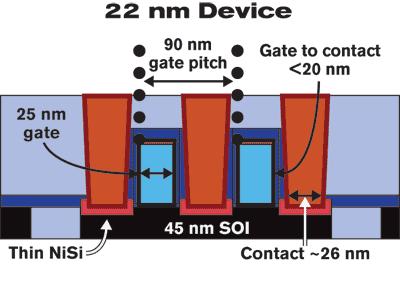

Will Moore's Law soon hit a brick wall? To make sure it doesn't — Cymer, ASML, and Intel have spent billions developing EUV lithography.

Here is the current state of EUV — it's a matter of when, not if.

I wrote this in 2013. For current progress on EUV go to YouTube (that's how I stay current.) Since 2013, ASML (which used to belong to Philips) bought laser light source company Cymer (still in San Diego.) And, EUV is mainly used to great advantage in Taiwan by TSMC. Intel, having focused for years on financial engineering, is now struggling.

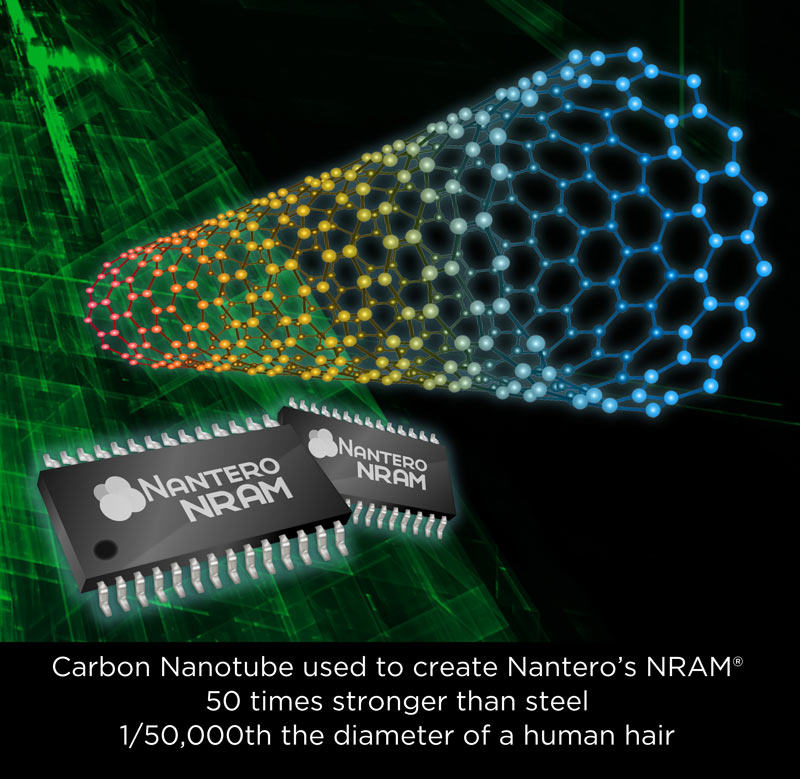

2025 update: this article is archival only. NAND Flash has been spectacularly successful. But, Optane (from Intel) is dead and so is Nantero.

Although NAND flash has been a spectacular success (as in Amazon's best selling Samsung 850 Evo SSDs,) two new technologies could soon eclipse it, and even compete with DRAM in speed.

In the works for over a decade, startup Nantero's carbon nanotube (CNT) NRAM (non-volatile memory) will finally hit the market in 2018. With a fresh infusion of $21 million for further development, their CNT NRAM has been licensed by several manufacturers. This will truly be a ground-breaking advance.

Intel and Micron made big waves in 2015 when they announced their 3D XPoint tech. It will be marketed starting in 2017 as Optane, initially for servers and subsequently for high-end gaming PCs.

Both of these new memory techs may help usher in a new world of inexpensive genomics, cutting edge brain simulations, and autonomous vehicles.

Recently the (Ray) Kurzweil Accelerating Intelligence (KAI) newsletter ran a major article by Lt Col Peter Garretson (US Air Force) entitled What our civilization needs is a billion year plan.

Here's what made me bristle in that article: 1) strong advocacy of manned space programs, 2) using those programs to rescue humanity, and 3) pushing the notion of trillions of humans spreading throughout the galaxy.

My rebuttal in KAI argues that 1) manned missions, costing 100X the price of science-based launches, are a waste of precious NASA resources better spent on robotic probes and rovers, 2) humanity is already choking off the biosphere of Earth - we don't need trillions more in space, and 3) humanity is a stepping stone to the profound intelligences that will emerge within the next century or two and that will be the great engineers and explorers of space.

Meanwhile, let's focus on sustaining humanity's home right here on Planet Earth. With luck, we will be able to enjoy life here for many generations to come.

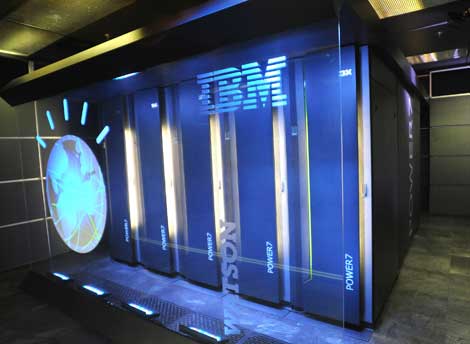

Beating Jeopardy! was a stunning victory for IBM's Watson and its DeepQA architecture. It was headline news in 2011. My writeup (voted the best on the net by Quora) provides links to the best online articles and videos, and summarizes the project's key AI components. Watson was a milestone accomplishment that will lead to cheap, widely available QA systems. It's a step toward passing the Turing Test, but not an advancement in perception as were the DARPA Grand Challenge robotic cars. With Jeopardy Champ Ken Jennings, I too welcome our new AI Overlords, but take heart at having a brain that's the equivalent of a server farm but that runs on coffee and donuts.

This is my 2009 book review of Total Recall: How the E-Memory Revolution Will Change Everything, by tech magnate Gordon Bell and his Microsoft colleague Jim Gemmell. They describe a future of total data capture that's inevitable for many of us.

Kevin Kelly is the renowned futurist and founding executive editor of Wired magazine. As I looked at the rave reviews for his new non-fiction work, The Inevitable, I wondered, "Are Marc Andreessen, David Pogue, and Chris Anderson just giving the Senior Maverick at Wired his proper obeisance?"

No! This is another home run (as was What Technology Wants) — another magnum opus — this time addressing the phase shift in civilization signaled by the amalgam of internet + seven billion souls.

This is my book review of Kevin Kelly's 2010 magnum opus What Technology Wants. Although I disagree with his view of the benign nature of technology, this is an important book that I whole-heartedly recommend.

My figure of merit for books and movies is number of re-reads or re-watches.

This book gets regularly re-read. (My favorite movie list includes Avatar and the recent Star Trek: Into Darkness. My son and I had high hopes for the remake of Star Wars, but it was an artistic dud.)

After Microsoft started their forced cram-downs of Win10 in December, 2015, I thought, "Ah, poo-poo — I'll knuckle under." So, I put a blank SSD into my trusty Win7 tower (the case is always open,) and let MSFT overwrite my venerable Win7 (see next story.)

One of the little nagging problems I had was that I couldn't reliably drag the active window with my mouse. So, I swore at it for a week (there were other problems, too) and then I reinstalled my trusty Win7 (by just plugging in another SSD.)

I did ultimately solve the problem. It's easy, but — really? — this should've been cracked in Microsoft user focus groups. How can they ignore their customers like that? (Answer: they've been relying on their monopoly status for decades. Look at the stock chart.) Microsoft's got troubles.

PS: Note to reader: I've been using Win10 for four years now and like it (ie I no longer swear at it.) But, as with Win7 below, initially there were problems.

In 2016, as I contemplated my shift to Win10, I was amused at the thought that I had dis-ed the then rock-solid Win7 here in this 2012 piece.

In 2016 at Stanford as Professor Olaf Sporns (of Connectome fame) was lecturing to a packed audience, Microsoft servers tried to do their usual forced update to his laptop. His powerpoints came to a screeching halt, as his SRO audience groaned.

Microsoft mainly caters to their business customers — the ones they really care about — and whether and when they upgrade to Win10 from the (then venerable) Win7.

I have a few old friends who work for the Evil Empire (in research) — so I always dis Microsoft less than it deserves. (Besides, Bill Gates' name is on the Stanford Computer Science building — and the Paul Allen Center for Integrated Systems is close by. Bill (and his late partner Paul) does great philanthropy.) Note that Bill himself gets frustrated by Microsoft's software.

I kind of believe (former CTO) Nathan Myhrvold's explanation of Microsoft's difficulties. They support thousands of different devices — Apple's machines all fit on one kitchen table (as CEO Tim Cook likes to point out.)

In 2016 Microsoft was angering thousands of its customers owing to its nightly Win10 forced cram down. (Like the businesses, I was also locked in with all my networked machines.)

My local Apple Store and Microsoft store are immediately adjacent to one another. These 2016 photos speak louder than words. (Apple has a far more enthusiastic fan base.)

Note: Under new CEO Satya Nadella MSFT has been partially exonerated.

(This is a 2007 letter I sent to MIT's Technology Review responding (in agreement) to an article by Yale's Professor David Gelernter AI is Lost in the Woods.)

In the past decade AI has made headline-generating progress with its self-driving cars, speech-recognizing intelligent assistants, and robots.

Much of that progress in AI and machine-learning has resulted from the adaptation of neural network models.

But, if you think human-level intelligence is just around the corner, you've been misled.

Much of the brain is still terra incognita, and some of that detail may be required to replicate human consciousness.

My review of Intel's new (April 2011) 320 series, solid state drives (SSDs). They're awesome.

2016 update — I now prefer Samsung EVO SSDs — apologies to my friends who work at Intel. Here's part of what sold me on Samsung SSDs.

The Sammies are cheaper — $86 at Amazon — and their Data Migration software backs up your drive in minutes, even while your computer's on.

If you're still using a rotating hard-drive, you may also want to get this home computer.

This is a chapter-length postscript to a science fiction novel I was writing in 1995. It incorporates elements of the Lifeboat Foundation (not then in existence) and the Millennial Foundation.

This work introduced the noosphere, the ocean of knowledge in which humanity dwells — a theme central to the prescient Teilhard de Chardin, and his disciple, Fr. Thomas Berry.

One of the next big steps in evolution will occur when the AIs master all the knowledge on the internet.

I think that may occur before 2100 but that depends on Moore's Law continuing. It also assumes that mankind does not self-destruct.

A brief bio I wrote a decade ago about my life-long interest in the mind/body problem.

Woody Allen would say, "which is it better to have?"

A chapter length autobiography of my interest in the mind/brain and software/computer relationship.

I was writing this for a book. My current view is that books are almost obsolete.

Why buy a book when you can read stuff free on the web? (Also, who cares about biographies? (My favorite is Lytton Strachey's bio of Queen Victoria. Like Victoria, I favor rule by monarch.) And, the public's attention span is at most a month. As an author, why bother?)

As an old guy, however, all my life I've enjoyed reading a book at bedtime (and actually turning the pages.) My current favorite bedtime reading is Ashlee Vance's bio of Elon Musk. It merits an A+. I also love all of Steve Pinker's books.

(Another chapter length essay I wrote a decade ago on the power of knowledge. (Some of my assertions remain valid even after my latest epoch of fascination with neuroscience.) Yes, intelligence is multi-dimensional — social, emotional, motoric, spiritual — but that's later.)

TED is the annual conference of the tech cognoscenti. Fortunately, their fifteen minute talks are all available. (I originally wrote this paragraph in 2007 before TED was so widely known.) Here are a few of my favorites, just updated for 2018.

In 2012 while attending the University of Arizona's Towards a Science of Consciousness, I shared several meals with TED's owner/organizer, economist Chris Anderson — getting the inside scoop. Chris is the brilliant, low-key guy who asks the questions after many of the best talks.

Kevin Kelly is the founding executive editor of Wired Magazine. In 2008 Kevin posted an elegant article on Evidence of a Global SuperOrganism. It posited four assertions: the Web is 1) a manufactured superorganism, 2) an autonomous superorganism, 3) an autonomous, smart superorganism, and 4) an autonomous, conscious superorganism.

My response provides evidence that the Web is becoming exponentially smarter. A quantum leap will occur when it can read, synthesize, and learn from its exabytes of content.

Conscious awareness is entirely distinct and is a huge mystery. Awareness seems to be characterized by the large scale, multi-modal integration of perceptions of self and environment. All mammals are conscious (and probably other chordata and even some invertebrates). In 2008 I reviewed Gaillard's research on gamma oscillations, one possible telltale sign.

My detailed lecture notes appear above.

The Singularity Summit used to be one of my favorite conferences.

(Now, I consume a steady diet of the subdomains: neuroscience, AI, molecular bio, nanotech.)

In 2010 it was held in San Francisco and included a mix of well-known singularitarians (Ray Kurzweil, Eliezer Yudkowsky, and Ben Goertzel); neuroscientists (Brian Litt, Terry Sejnowski, and Demis Hassabis); psychologists (John Tooby and Irene Pepperberg); computer scientists (Shane Legg, Steve Mann, David Hanson, and Ramez Naam); and biologists (Greg Stock, Lance Becker, and Dennis Bray).

Here's a report on the 2012 Summit.

Manna is a poignant dystopian work of social commentary in the tradition of Brave New World and 1984. Written by Marshall Brain (inventor of HowStuffWorks) and freely available on the web, it deserves a wide audience.

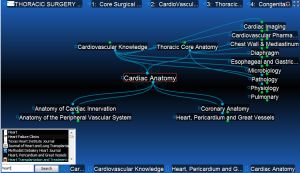

This is an unsolicited, unpaid testimonial for TheBrain, a software program I've used daily for a decade to keep track of everything.

WebBrain is the server-based version of TheBrain that I use for sharing the public half of my brain. Most notably, WebBrain is where I upload and store my hundreds of detailed Stanford lecture notes. Court-reporter style, I've got meticulous notes on all our neuroscience, psychology, and AI superstars. Those fields have increasingly essential overlaps. Here's a way to keep up.

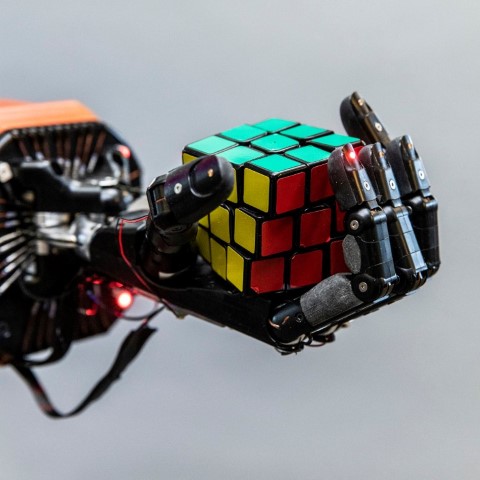

This clip shows what you can achieve when you combine Honda's million dollar humanoid robot ASIMO with Takeo Kanade's outstanding computer vision group at CMU.

ASIMO on Wikipedia. Here ASIMO is running, and here it is learning identities of objects.

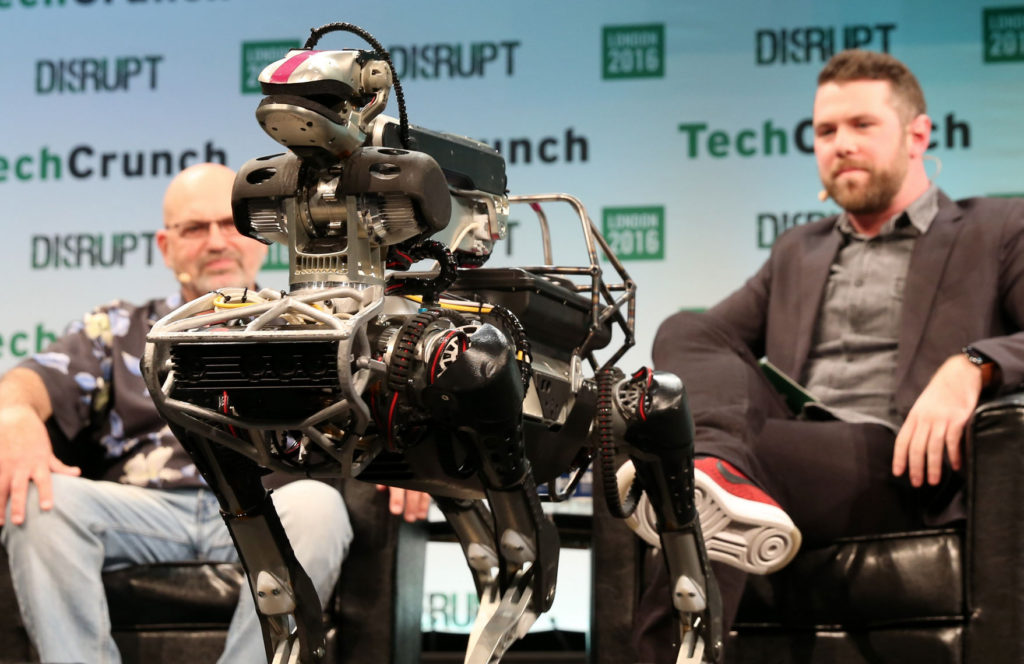

In 2017 news that rocked the robotics world, Boston Dynamics was sold by its owner, Google, to the Japanese multinational, SoftBank. Boston Dynamics and its founder, Marc Raibert, stand head and shoulders above everyone else in the world of legged robots.

While they're most famous for their legged robots, Boston Dynamics has also recently developed a wheeled robot, called Handle. I mention it, because today's big news in robotics (April 2, 2019) was Boston's acquisition of Kinema Systems, which specializes in computer vision and gripper systems for warehouse logistics. Here's a great video of Handle wheeling around a warehouse stacking boxes. (I love those heavy, tyrannosaurus-like counterweights.)

In 2011 PETMAN was Boston Dynamics' most advanced legged robotic platform... PETMAN

I had to laugh at the company's blurb stating that it would be used to test clothing durability for the Defense Dept. That's akin to saying that 10,000 years ago Homo sapiens' outstanding distinctive feature was its ability to model animal skins.

Also look at Boston Dynamics' Cheetah robot running at 28 miles/hr. The company was founded by Marc Raibert, who spun it off from MIT in 1992. Here is Marc at Stanford CSD explaining the challenges of legged robotics.

I was heartened to see this April 2012 DARPA Challenge Prize for humanoid robots. Also read the DARPA pdf. PETMAN will soon have a more important mission than modeling clothing. The great sci fi author Philip K. Dick had it exactly right in Blade Runner.

Robots will be the explorers of outer space - not humans. (See this superb collection of hi-res photos of the MSL/Curiosity Rover on Mars.)

Here's Marc Raibert (on our left above) in 2017. In this must-see TED talk, Marc shows his newest robot, Spot, strutting its stuff in an arrestingly fluid, life-like way.

In this TED talk Chris Urmson (former head of Google's driverless car program,) tells us how autonomous cars see the road. But, this is old news.

In 2017 and 2018 Waymo (Google's self-driving division) actually rolled out services in Phoenix. You might be concerned about the accidents (and fatalities) that always splash across the headlines. But self-driving cars can't and won't be stopped. And, here's a more important point.

All the big car companies are working on driverless cars. When this market explodes, that consumer demand will accelerate technology development through the roof (machine-vision, AI, GPUs, flash storage, communications, batteries.) Forget smartphones as a driver of tech! They're so yesterday.

In Kevin Kelly's excellent 2010 book What Technology Wants the central theme is that technology's a never ending cycle of innovation: each new product or idea is built upon previous innovations. So — no electricity without copper wires; no copper wires without copper smelting and wire extrusion. No electricity without coal or uranium or dams; none of those without roads and vehicles.

Willow Garage (WG) was one of the purest examples. WG was specifically designed to address the key stumbling block to robotics. Every new academic or industrial team had to start from ground zero, assembling a suite of sensors, actuators, and controllers. Hence, each new team used to have to reinvent the wheel; their final product would be a demo video: progress by demo. Then, another team would start from scratch. Willow Garage ended that and hugely promoted robotics innovation by making sharing of hardware and software easy. Their main software packages (still widely used) are ROS (the Robot Operating System) and OpenCV (open computer vision).

WG also created a community of OPEN SOURCE robotics developers who share not only their insights and algorithms but also their hardware and code. A grad student who would've previously had to spend four years building a platform and only one year on his specific innovation is now able to start immediately on his innovation by using the community's previously developed code. Like Wikipedia, Willow Garage unleashed the creative talents of hundreds of new contributors.

Although WG was only four years old when I wrote this, its results were astounding. Their flagship robot was their PR2 (personal robot version 2). Watch PR2 fold laundry (the holy grail is stuffing a pillow into a pillowcase). See PR2 clean up after the party, or fetch a beer from the refrigerator. Examine PR2's sensor suite. Here, in only five days, the WG team programs the PR2 to play pool. Watch PR2 open a door, find a socket, and plug itself in. However, PR2 really needs a voice like its cousins R2D2 and WallE.

Addendum (2017): Yes, I'm aware that Willow Garage has largely morphed into a collection of robotics startups. I'll update this later.

In 15 minutes this superb video (8 million hits!) by CGP Grey nails the future of human (un)employment, here spelled out in persuasive detail. I enjoy E-biking after the swarms of self-driving cars around the Google campus.

At 95 minutes this is ... worth every second, if you're interested in artificial general intelligence (AGI: intelligence on a par with humans.) It doesn't get any better than MIT Prof. Josh Tenenbaum explaining why multi-layer ConvNets, so called "Deep AI," are only a part of the solution. His entertaining talk (Feb, 2018) shows what two year old kids can do that multi-layer neural nets alone will never do.

2013 will be a banner year for HCI: Human Computer Interaction. (I hear about developments in HCI before they go public in Prof. Terry Winograd's weekly HCI course at Stanford.) Two of my soon-to-be-released favorites are the MYO, an EMG-based forearm band featured above, and Leap Motion, a 3d video sensor.

Leap Motion is similar (but with higher resolution) to Microsoft's Kinect, which will itself have a major upgrade, the Kinect2, in 2013.

This year (2011) at the AAAI Conference in San Francisco, this video won an Oscar (actually a "Shakey") for best demo video. Swarms of robots cooperating to accomplish a task. NASA and scores of other labs are developing swarm robots to explore hazardous environments like the surface of Mars, Titan, or Jupiter's moon, Europa. Bring 'em on. It's time to end domination of Earth by hominids as Agent Smith informs Morpheus in The Matrix.

In this TED video, U. Penn. Prof. Vijay Kumar explains principles of quadrotors, flying in precise formation and cooperating to do construction and mapping.

In 1950 British code breaker Alan Turing set the most famous milestone of AI research - faking human discourse. A computer passes the Turing Test if it can fool humans into thinking that it is human. Kevin Warwick presents a good backgrounder on the Turing Test.

Better still is the Loebner Prize website, awarding money to the best competitors. But, best of all is ELBOT - the reigning champion. Try it (him?) out!

In 1997 IBM made AI history with its chess playing program DEEP BLUE, which beat world champion Garry Kasparov. In 2010 IBM was again advancing AI by attempting to beat humans at the popular TV game JEOPARDY. IBM's program, called WATSON, was named after IBM's founder. Here is WATSON in action.

If you're really a human, you should be able to distinguish CATS from DOGS, as in this test from ASIRRA. Bots can't do it, yet.

Prof. Andrew Ng presents a masterful one hour video overview of Stanford's STAIR Project. There are faster and more amazing robot demos on YouTube, but this is the video to watch if you are really interested in the intellectual challenges confronting robot builders. (Sept, 2012 update: here is a wonderful 2011, 16 min. overview of robotics and machine learning by Prof. Ng.)

Here, Prof. Fei-Fei Li presents the work of the Stanford Vision Lab to a family audience at the 2012 eDay (Engineering for Kids). Her wonderful presentation hides the incredible complexity of this cutting edge research. Although the computer could not recognize it, a five year old girl in our audience had no trouble identifying the star-nosed mole. Fei-Fei's group now holds the record for number of recognizable categories of objects: twenty thousand!

Doug was a contemporary of mine at Stanford. Since leaving about 30 years ago, he has led the longest running, best-funded AI project in history - CYC. His aim is to codify all of common sense, rendering it machine-comprehensible. In this video presented to AI researchers at Google he shows CYC's objectives and methods. (Much of common sense is nonconscious, situational, temporal, and subsymbolic. Despite that, CYC is essential background for AI builders.)

Until 2015 Luke was head of MIRI (the Machine Intelligence Research Institute.) Here he concisely addresses many of the key issues that MIRI studies. What is artificial general intelligence (AGI)? When will it be achieved? Does it require consciousness? Will it be based on whole brain emulation or rather will it involve de novo AI? Will it be friendly to humanity? Are there accelerants and spoilers in its development? Luke worked with many of the key players. His answers are accurate and brief.

AI researcher Monica Anderson has identified a key weakness in traditional AI: the exclusive reliance on symbols, models, and logic to compute the state of the world. Radically different are her model-free methods that use pattern-based computations. This series of videos from her company, Syntience, presents the problems with symbolic AI. Also included are videos by AI luminary Peter Norvig (Dir. of Research at Google) and futurist Jamais Cascio. My video on Consciousness, presented at the Bay Area AI-Meetup, is also included. Consciousness (and intelligence) is the paradigm case of subsymbolic, holistic, pattern-matching. AI must follow suit.

All boys (and many girls) have spent 10,000 hours playing video games by age 21. It causes neglect of school work and disengagement from reality. It promotes lack of physical activity and obesity. It stunts social skills and narrows the player's world. Is there any upside? Yes, says the Institute for the Future's Jane McGonigal. Massive multiplayer games, like World of Warcraft, promote sociability, cooperative problem solving, engagement, persistence in the face of continual defeat, and a sense of empowerment, and even heroism. Now, asks Jane, how do we harness those 10,000 hours to save the planet? Her answer is Urgent EVOKE, one of a series of video games that she is developing. See her present the concept at TED. If you cannot solve the world's problems alone, then EVOKE a solution. Go Jane!

Speaking of heroism, Joseph Campbell in 1949 extracted the formula from the world's classical mythologies. Here is the key to the Hero's Journey (as seen in The Matrix).

Is it possible you're reading this but haven't seen the 1999 film The Matrix? (It is possible. Many of my friends are meditators who hate violence (and tend to be female.) Perhaps they will be more successful in saving humanity from itself than my kind: media-saturated hacker males.) How about the 1999 film eXistenZ? Both movies raise the question, "Is totally immersive virtual reality (VR) possible?" Answer is "No. Brain in a Vat fooled by computer-generated VR is NOT possible." This Rudy Rucker (gonzo fiction/math whiz) essay eloquently says why. (I'll start and stop here: only 10^89 particles in the known Universe - insufficient compute power.)

Speaking of brains in vats: check out this one by AI game builder Chris Jurney. Is it real or virtual? Answer is REAL (ie real plastic).

Some of my friends are head freezers. Eternal life, maybe! Advertising-free, no!

There are two kinds of total immersive reality, and it's essential to distinguish them. You know VR (virtual reality) from films like The Matrix in which an entire fake world is computer-generated. That is not possible. However, the other kind of immersive reality - telepresence, is far more interesting and is quite possible. Telepresence refers to being immersed in a real physical environment that is some distance from you.

Total immersion means the immersion is so complete that you are not aware of your true location. (That was the situation in the sci-fi films Strange Days, Total Recall, Avatar, and Source Code.)

Telepresence in this wiki article is lamely being confused with video teleconferencing. That's like confusing looking at a photo of rock-climbing with actually being on the cliff face. Totally immersive telepresence is the key magic trick behind consciousness. Real immersive telepresence is what you've been experiencing every second of your life from the time you were born. It's like The Matrix only you can't escape. It always amazes me when some of my otherwise brilliant Silicon Valley friends don't get it. (It's like explaining water to a fish or air to anyone 300 years ago. It's invisible but true.) Philosopher of mind, Thomas Metzinger, explains it superbly here.

The crucial bottleneck in scientific progress is the number, brilliance, and resources of research teams on the planet. The pace of science has quickened as more and more countries have joined the enterprise. Another source of new leads is robotic science: eg high-throughput drug screening. Two recent projects, each touted as robot scientists, piqued my interest and reminded me of my Stanford AI research on the RX Project, an early success in automating scientific discovery. The new projects are 1) ADAM - an automated microbiology lab for generating hypotheses, conducting experiments, and interpreting results and 2) Eureqa, a Cornell project focused on automated interpretation of science data. Experiment design and interpretation are essential to human cognition and learning. It's the way children learn about their world, and its automation is an essential but challenging step toward The Singularity.

A regular debate I have with my NASA friends is "exploration of space - human or robotic?" My answer is that humans will explore Mars only after the robots first build the Mars Hilton, an argument elaborated by me here.

This video from the Ishikawa Komuro robotics lab reinforces that claim (2009).

But, to bring you up to 2022, here is the current state of the art in robotic hands: the ILDA Hand and the 2021 Nature Communications paper that describes it.

Tangentially, here are some of my favorite quadrotor (quadcopter) YouTube clips: Quadcopter Ball Juggling (also from ETH Zurich) and a quadrotor doing aggressive maneuvers. And this clip of quadrotors grasping parts and four of them autonomously building a tower. And here, from the GRASP Lab at U. Penn, a swarm of Nano Quadrotors in tight formations. (But having seen that, what do you now think of the common housefly that you so casually swat?)

March, 2013: Check out the Syfy channel's new TV show, Robot Combat League. Larger-than-life robots in hand-to-hand combat: what could be more meaningful?

A stunning demo from UCSB professor and composer JoAnn Kuchera-Morin, who created the Allosphere, a three-story-tall virtual reality environment connected to a supercomputer. This wonderful TED video shows the Allosphere in action as it navigates through a brain, inside blood vessels, inside cells, and inside atoms.

Augmented Reality (AR) means overlaying words and graphics on top of the real world. This excellent video from Columbia's AR group shows a marine using AR to fix a tank turret.

It is possible to take augmented reality too far, to Hyper-Reality (OMG!). (That video explains my great joy in spending weeks in the Sierras with only a backpack and no electronics. All that remains is the Universe.)

You may've already seen this Google video of Project Glass (April 2012) from before Glass crashed and burned. Too bad! Now, in 2022, the company formerly known as Facebook (Meta) rules the VR roost. I currently spend about an hour a day in VR (actually VR²: transformed first by the Oculus Quest 2 and second by my brain).

When will robots autonomously manufacture their own parts? They already do. Robots vs Humans? Compare the precision of the CNC machine to the "precision" of YouTube comments by humans. Which is evolutionarily more fit?

(BTW, this clip of Peter Weyland's 2023 TED Talk had me, popcorn in hand, enthusiastically awaiting the June 2012 release of Prometheus. See also the Weyland Industries David robot.

Addendum June 23, 2012: Prometheus was a dud - the trailers were much better. You're better off just seeing The Avengers again or Ridley Scott's Blade Runner. Or, see Blade Runner 2049, a worthy sequel.)

I agree with the editors at Wired; this novel is "good — scary good." Don't tell your friends about it unless you know they can afford a few nights of feverish reading. The review by 'TChris' at Amazon is accurate. This is as good as anything Michael Crichton ever wrote.

This project done by Dave Singleton sooo wound my clock that my son (Sean) and I just had to go out and buy an Arduino to replicate it. First, look at the 35-second video at the very bottom of his write-up (not the first clip). Next, contemplate the fact that DARPA, CMU, Stanford, and Google spent millions developing self-driving cars. So, here's Dave doing it with a $29.95 RC car! I got my start at age 11 building ham radio kits. Buy your kids Arduinos: nothing beats "hands on."